Agents

- Class intro

- Agents

AI Intro

AI - Machines simulating aspects of intelligence

4 approaches:

- Thinking Humanly

- Acting Humanly

- Thinking Rationally

- Acting Rationally

Quick summary of metrics:

Thinking Approach - Concerned with the thought process and reasoning that brings about decisions

Acting Approach - Concerned with the behavior of AI

Humanly - Measure success as approximating or emulating human performance

Rationally - Measure success against an ideal standard of performance, called rationality

Thinking Humanly

Cognitive modeling of AI. Develop a theory of cognition and implement it computationally

BUT

- Requires a real understanding of the human mind

Acting Humanly

“Make machines do things that require intelligence in humans”

- The Turing test for example

BUT - Not all behavior requires intelligence

Thinking Rationally

Aristotle’s version of intelligence: reasoning to the right conclusion given the available information.

Define the thought process in formal logic (e.g. first-order logic, FOL)

BUT

- Not easy to convert the world into formal logical form, especially with uncertainty

- Hard to generalize to complex problems in practice, e.g. with hundreds of facts

Acting Rationally

DOING the right or useful thing so as to achieve the best outcome

Advantages:

- More general than thinking rationally, as correct inference is just one of several mechanisms for achieving rationality

- More amenable to scientific development than the approaches based on human behavior or thought

ChatGPT

How does it work?

- Large-Scale Self-Supervised Pretraining

- Obtain large text data corpus

- Train a giant transformer using unsupervised or self-supervised pretraining tasks

- GPT is pretrained with next token prediction

- Fine-tuning

- Supervised Instruction-based Finetuning

- Reinforcement Learning from Human Feedback (RLHF)

- GPT is fine-tuned to go from predicting text to following instructions

- Inference with Prompts

- Template Prompt

- In-Context Learning

- Few-shot: In addition to the task description, the model sees a few examples of the task. No gradient updates are performed
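The few-shot setup above can be sketched as plain string assembly. This is a minimal illustration, not how any particular model or API expects its input: the function name `build_few_shot_prompt`, the `Input:`/`Output:` template, and the translation examples are all assumptions made for the sketch. The point is that the examples live in the prompt itself, so no weights are updated.

```python
def build_few_shot_prompt(task_description, examples, query):
    """Assemble a few-shot prompt: task description, worked examples, new input.

    The "Input:/Output:" template is a hypothetical choice for illustration.
    """
    lines = [task_description, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # The last block leaves the output empty for the model to complete.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Translate English to French.",
    [("sea otter", "loutre de mer"), ("cheese", "fromage")],
    "peppermint",
)
print(prompt)
```

Dropping the `examples` list to empty would turn the same template into a zero-shot prompt: only the task description and the query remain.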

Issues

- Hallucinations

- Lacks complex and rigorous reasoning

- How should it behave and who decides?

- Are LLMs the best path to AI?

Agents

Topics:

- What is an agent?

- What is a rational agent?

- How to build a rational agent?

- PEAS of Task Environments

- Seven Properties of Task Environments

- Five Agent Programs

Agent - Anything that can be viewed as perceiving its environment through sensors and acting upon that environment through actuators

Definitions for agents

Percept - the agent’s perceptual inputs at a particular timestamp

Percept Sequence - a vector P, the complete history of everything the agent has ever perceived.

Action Set - the set of all actions available to the agent

Agent Function - a mapping from any percept sequence to an action

Agent Program - a program that implements an agent function

Architecture - the computing device with physical sensors and actuators that the program can run on

Agent = architecture + agent program which implements the agent function
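To make the difference between an agent function and an agent program concrete, here is a minimal table-driven sketch. The lookup table plays the role of the agent function (percept sequence to action), and the closure that consults it is the agent program. The two-square vacuum world, the percept format `(location, status)`, and the `"NoOp"` default are assumptions for illustration only.

```python
def make_table_driven_agent(table, default_action="NoOp"):
    """Return an agent program that looks up its action in `table`.

    `table` maps a percept sequence (a tuple of percepts) to an action.
    """
    percepts = []  # the percept sequence: everything perceived so far

    def agent_program(percept):
        percepts.append(percept)
        return table.get(tuple(percepts), default_action)

    return agent_program


# Hypothetical fragment of an agent function for a two-square vacuum world.
table = {
    (("A", "Dirty"),): "Suck",
    (("A", "Clean"),): "Right",
    (("A", "Clean"), ("B", "Dirty")): "Suck",
}

agent = make_table_driven_agent(table)
actions = [agent(("A", "Clean")), agent(("B", "Dirty")), agent(("B", "Clean"))]
print(actions)  # ['Right', 'Suck', 'NoOp']
```

The table grows with every possible percept sequence, which is why practical agent programs compute the action instead of storing it.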

Rational Agents

Rational Agent - An agent that does the right or useful thing so as to achieve the best outcome

Performance Measure - An objective criterion for success of an agent’s action given the percept sequence

Rationality depends on:

- Agent’s prior knowledge

- Actions an agent can perform

- Agent’s percept sequence to date

- Performance measure defining success

i.e. - “A rational agent maximizes its performance measure, given all of its knowledge”
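One way to read this definition is as an argmax over the available actions. The sketch below is an assumption-laden illustration: `outcome_model` stands in for whatever the agent knows (prior knowledge plus percepts so far) as a list of `(outcome, probability)` pairs, and `performance` scores an outcome. All names and numbers are hypothetical.

```python
def rational_action(actions, outcome_model, performance):
    """Pick the action with the highest expected performance."""
    def expected(action):
        return sum(p * performance(outcome) for outcome, p in outcome_model(action))
    return max(actions, key=expected)


# Hypothetical knowledge: sucking usually cleans the square, doing nothing never does.
def outcome_model(action):
    if action == "Suck":
        return [("clean", 0.9), ("dirty", 0.1)]
    return [("dirty", 1.0)]


# Hypothetical performance measure: one point for a clean square.
def performance(outcome):
    return 1.0 if outcome == "clean" else 0.0


best = rational_action(["Suck", "NoOp"], outcome_model, performance)
print(best)  # Suck
```

Rationality here is relative to what the agent knows: with a different outcome model or performance measure, the same agent could rationally pick a different action.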

Specifying Agents

Task environment - Problem specification for which the agent is a solution

PEAS - How to specify (describe) the task environment. Helps designers define the problem and assess whether a rational agent is appropriate.

- Performance measurement

- Environment description

- Actuators (to take actions)

- Sensors (to receive sensory input)

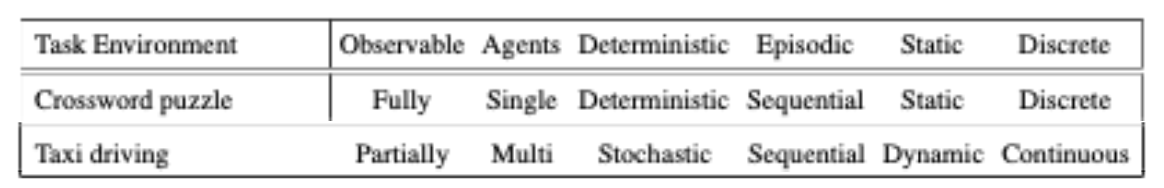
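A PEAS description can be written down as a small record with one field per letter. The sketch below uses an automated-taxi example chosen purely for illustration; the class name `PEAS` and the specific entries are assumptions and are not taken from the figure above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PEAS:
    """One field per PEAS component of a task environment."""
    performance: tuple
    environment: tuple
    actuators: tuple
    sensors: tuple


# Illustrative entries for an automated taxi (assumed, not exhaustive).
taxi = PEAS(
    performance=("safe", "fast", "legal", "comfortable"),
    environment=("roads", "traffic", "pedestrians", "customers"),
    actuators=("steering", "accelerator", "brake", "horn"),
    sensors=("cameras", "GPS", "speedometer", "odometer"),
)

for component in ("performance", "environment", "actuators", "sensors"):
    print(f"{component}: {', '.join(getattr(taxi, component))}")
```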

Seven Properties of Task Environments

- States: fully, partially, or not observable

- Agency: single or multiple agents

- Successor States: deterministic, non-deterministic, or stochastic

- Agent decisions: episodic or sequential.

Episodic = each decision is a one-off and does not depend on other decisions

Sequential = the current decision could influence future decisions

- Environment: static or dynamic (while the agent decides)

- State of the environment and action of the agent over time: discrete or continuous

- Knowledge of environment: known or unknown to the agent
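The seven properties can be treated as a checklist with a fixed set of allowed values per property. The sketch below is one possible encoding: the field names are made up for this example, and the crossword classification is an illustrative assumption.

```python
from dataclasses import dataclass

# Allowed values per property, following the list above (field names are assumed).
ALLOWED = {
    "observability": {"fully", "partially", "not"},
    "agency": {"single", "multiple"},
    "successor_states": {"deterministic", "non-deterministic", "stochastic"},
    "decisions": {"episodic", "sequential"},
    "dynamics": {"static", "dynamic"},
    "state_and_time": {"discrete", "continuous"},
    "knowledge": {"known", "unknown"},
}


@dataclass(frozen=True)
class TaskEnvironment:
    observability: str
    agency: str
    successor_states: str
    decisions: str
    dynamics: str
    state_and_time: str
    knowledge: str

    def __post_init__(self):
        # Reject values outside the checklist so typos are caught early.
        for name, allowed in ALLOWED.items():
            value = getattr(self, name)
            if value not in allowed:
                raise ValueError(f"{name}={value!r} not in {sorted(allowed)}")


# Illustrative classification of a crossword puzzle.
crossword = TaskEnvironment(
    "fully", "single", "deterministic", "sequential", "static", "discrete", "known"
)
print(crossword)
```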

Five Agent Programs

Agent Program: Maps from percepts to actions.

Types:

- Simple reflex agent - Select actions based on the current percept, ignoring the rest of the percept history

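- Model-based reflex agent - Keeps internal state, updated from the percept history, to track parts of the environment it cannot currently observe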

- Goal-based agent

- Utility-based agent

- Learning-based agent
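As a concrete instance of the first type, here is a minimal simple reflex agent for a two-square vacuum world. The world, the percept format `(location, status)`, and the action names are assumptions for illustration. Note that the function looks only at the current percept and keeps no history.

```python
def simple_reflex_vacuum_agent(percept):
    """Choose an action from the current percept alone (no memory)."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    if location == "A":
        return "Right"
    return "Left"


for percept in [("A", "Dirty"), ("A", "Clean"), ("B", "Clean")]:
    print(percept, "->", simple_reflex_vacuum_agent(percept))
```

Because it has no memory, this agent will shuttle between the two squares forever once both are clean. Avoiding that requires internal state, which is the step up to a model-based agent.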