📗 -> 05/28/25: ECS170-L25

HMM Extended

Machine Learning Slides

🎤 Vocab

❗ Unit and Larger Context

- What’s Machine Learning

- Types of Machine Learning

- I.I.D Assumption and Generalization

- The Fundamental Tradeoff between Bias and Variance

- Bias and Variance

- Overfitting and Underfitting

- Regularization

- Hyperparameters, Three-fold split, Cross-Validation

- Example of Polynomial Regression

✒️ -> Scratch Notes

Laaate

HMM Extended

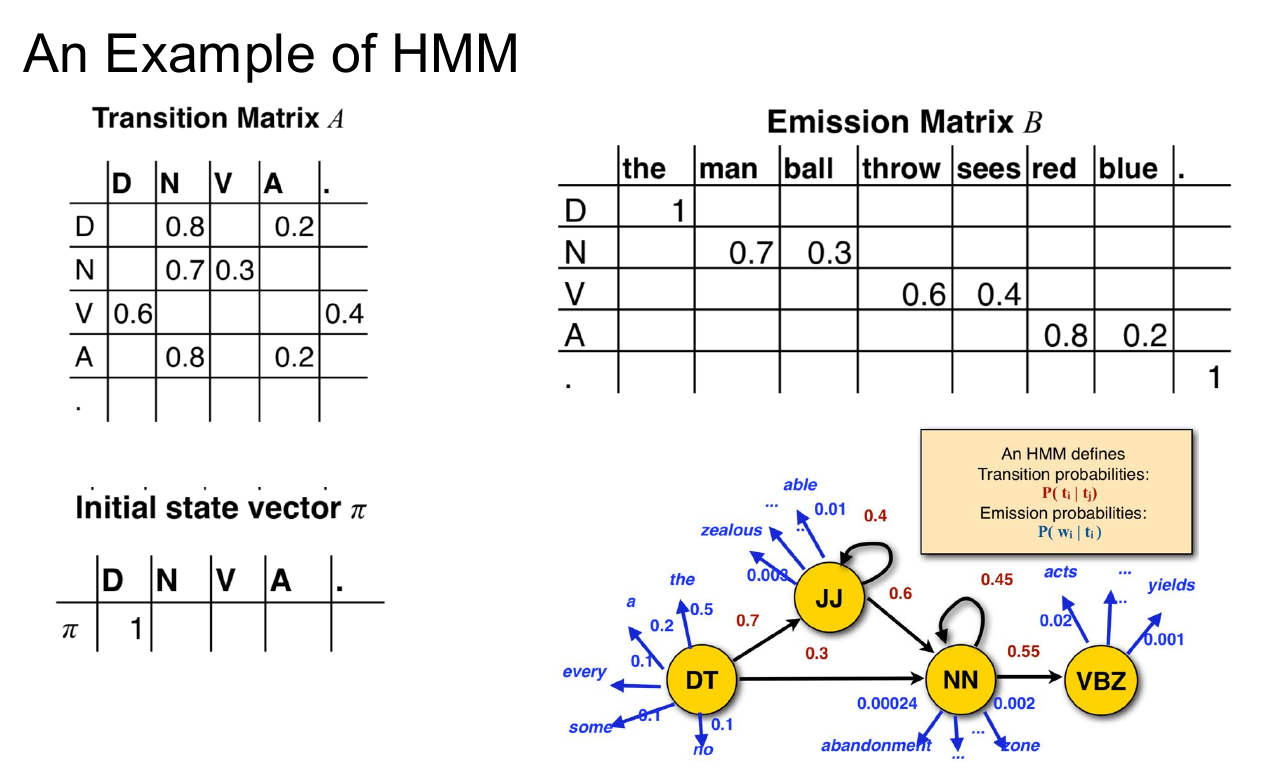

HMM for POS Tagging

Requirements:

- A set of $N$ states $Q = \{q_1, \dots, q_N\}$ (the POS tags)

- Observations $O = o_1, \dots, o_T$ - each observation is a word from an output vocabulary $V$ of size $M$

- An $N \times N$ state transition probability matrix $A$

$a_{ij}$ is the probability of moving from state $q_i$ to state $q_j$

- Emission probabilities - an $N \times M$ matrix $B$

$b_i(w)$ is the probability of emitting word $w$ in state $q_i$

- An initial state probability distribution $\pi$

$\pi_i$ is the probability of being in state $q_i$ at the first time step
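As a concrete picture of these components, here is a minimal sketch of a toy HMM in plain Python. The tag set, the vocabulary, and every probability are made up for illustration, and the names `A`, `B`, and `pi` follow the usual textbook convention rather than anything fixed by the lecture.

```python
# Toy HMM for POS tagging; all numbers are invented for illustration.
states = ["DET", "NOUN", "VERB"]   # the N POS tags
vocab = ["the", "dog", "barks"]    # the M words in the vocabulary

# pi[i]: probability of being in state i at the first time step
pi = {"DET": 0.8, "NOUN": 0.1, "VERB": 0.1}

# A[i][j]: probability of moving from state i to state j (N x N)
A = {
    "DET":  {"DET": 0.0, "NOUN": 0.9, "VERB": 0.1},
    "NOUN": {"DET": 0.1, "NOUN": 0.2, "VERB": 0.7},
    "VERB": {"DET": 0.5, "NOUN": 0.3, "VERB": 0.2},
}

# B[i][w]: probability of emitting word w in state i (N x M)
B = {
    "DET":  {"the": 1.0, "dog": 0.0, "barks": 0.0},
    "NOUN": {"the": 0.0, "dog": 0.9, "barks": 0.1},
    "VERB": {"the": 0.0, "dog": 0.1, "barks": 0.9},
}


def is_distribution(probs, tol=1e-9):
    """True if the values are non-negative and sum to 1."""
    values = list(probs.values())
    return all(v >= 0 for v in values) and abs(sum(values) - 1.0) < tol


def joint_probability(tags, words):
    """P(tags, words): start prob, then one transition and one emission per step."""
    p = pi[tags[0]] * B[tags[0]][words[0]]
    for prev, cur, word in zip(tags, tags[1:], words[1:]):
        p *= A[prev][cur] * B[cur][word]
    return p


p = joint_probability(["DET", "NOUN", "VERB"], ["the", "dog", "barks"])
```

Each row of `A` and `B`, and `pi` itself, has to be a probability distribution, which is what `is_distribution` checks.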

Building an HMM Tagger

Need to train the model:

- Supervised - A corpus labeled with POS tags

- Estimate the parameters by maximum likelihood estimation (MLE)

- Unsupervised - Have a corpus, but it's raw text without tags. It helps to have a dictionary of which POS tags each word can have

- Forward-backward algorithm
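For the supervised case, MLE comes down to counting and normalizing. The sketch below assumes a tiny hand-made tagged corpus and a hypothetical helper `train_hmm`. It uses no smoothing, so unseen words and transitions get no probability at all, which a real tagger would need to handle.

```python
from collections import Counter, defaultdict


def train_hmm(tagged_sentences):
    """Estimate start, transition, and emission probabilities by relative frequency."""
    start_counts = Counter()
    trans_counts = defaultdict(Counter)
    emit_counts = defaultdict(Counter)

    for sentence in tagged_sentences:
        if not sentence:
            continue  # skip empty sentences
        tags = [tag for _, tag in sentence]
        start_counts[tags[0]] += 1
        for word, tag in sentence:
            emit_counts[tag][word] += 1
        for prev, cur in zip(tags, tags[1:]):
            trans_counts[prev][cur] += 1

    def normalize(counter):
        total = sum(counter.values())
        return {key: count / total for key, count in counter.items()}

    pi = normalize(start_counts)
    A = {tag: normalize(c) for tag, c in trans_counts.items()}
    B = {tag: normalize(c) for tag, c in emit_counts.items()}
    return pi, A, B


# Hand-made toy corpus of (word, tag) pairs, for illustration only.
corpus = [
    [("the", "DET"), ("dog", "NOUN"), ("barks", "VERB")],
    [("the", "DET"), ("cat", "NOUN"), ("sleeps", "VERB")],
    [("dogs", "NOUN"), ("bark", "VERB")],
]
pi, A, B = train_hmm(corpus)
```

Here two of the three sentences start with `DET`, so `pi["DET"]` comes out as 2/3, and every `DET` is followed by a `NOUN`, so `A["DET"]["NOUN"]` is 1.0.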

Viterbi Decoding

Find the most likely sequence of tags for the given sequence of words

- A decoding problem

Use Viterbi decoding
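A minimal sketch of Viterbi decoding over a toy tagger follows. The parameters are invented for illustration, and the function multiplies raw probabilities to stay readable. Practical implementations usually work in log space to avoid underflow on long sentences.

```python
def viterbi(words, states, pi, A, B):
    """Return the most likely tag sequence for `words` and its joint probability."""
    # best[t][s]: probability of the best tag path that ends in state s at time t
    best = [{s: pi[s] * B[s].get(words[0], 0.0) for s in states}]
    back = [{}]  # back[t][s]: predecessor of s on that best path

    for t in range(1, len(words)):
        best.append({})
        back.append({})
        for s in states:
            prev, score = max(
                ((p, best[t - 1][p] * A[p][s]) for p in states),
                key=lambda pair: pair[1],
            )
            best[t][s] = score * B[s].get(words[t], 0.0)
            back[t][s] = prev

    # Pick the best final state, then follow the back-pointers to the start.
    last = max(states, key=lambda s: best[-1][s])
    path = [last]
    for t in range(len(words) - 1, 0, -1):
        path.append(back[t][path[-1]])
    path.reverse()
    return path, best[-1][last]


# Toy parameters, invented for illustration.
states = ["DET", "NOUN", "VERB"]
pi = {"DET": 0.8, "NOUN": 0.1, "VERB": 0.1}
A = {
    "DET":  {"DET": 0.0, "NOUN": 0.9, "VERB": 0.1},
    "NOUN": {"DET": 0.1, "NOUN": 0.2, "VERB": 0.7},
    "VERB": {"DET": 0.5, "NOUN": 0.3, "VERB": 0.2},
}
B = {
    "DET":  {"the": 1.0},
    "NOUN": {"dog": 0.9, "barks": 0.1},
    "VERB": {"dog": 0.1, "barks": 0.9},
}

tags, prob = viterbi(["the", "dog", "barks"], states, pi, A, B)
```

The dynamic program keeps only the best path into each state at each step, so the cost grows with the sentence length times the square of the number of tags, rather than with the number of possible tag sequences.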

Evaluation:

Largely a solved problem; evaluation metrics already exist.

Machine Learning

Traditional Computer Science

We are given a well-defined description of a problem: map a given input to its corresponding output.

- Input output relations are well-defined

- E.g. sorting, shortest path, spanning trees, scheduling, etc.

The goal is to write a computer program that efficiently generates an output for a given input.

However, for some problems writing such a program by hand is intractable, such as determining from an image whether a mushroom is edible.

Data-driven problem solving

Given data on previously seen and classified edible and poisonous mushrooms, can we classify future unseen mushrooms?

What we have

- Input/output relations

What we don’t have

- Knowledge on how the mapping works

Common types of problems:

Given:

- Input

Predict:

- Output (label, decision, action, etc.)

Goal

The ultimate goal of machine learning is generalization: making accurate predictions on future, unseen examples.
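One way to see why generalization is the goal is to score a model separately on examples it has seen and on held-out ones. The sketch below uses a 1-nearest-neighbor classifier as a stand-in learner. The feature values and labels are synthetic, and the two features are hypothetical measurements, not real mushroom data.

```python
def nearest_neighbor_predict(train, x):
    """Label x with the label of its closest training example (1-NN)."""
    def sq_dist(a, b):
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

    _, label = min(train, key=lambda example: sq_dist(example[0], x))
    return label


def accuracy(train, examples):
    """Fraction of `examples` whose predicted label matches the true label."""
    correct = sum(nearest_neighbor_predict(train, x) == y for x, y in examples)
    return correct / len(examples)


# Synthetic (features, label) pairs, invented for illustration.
train = [
    ((2.0, 1.0), "edible"), ((2.5, 1.2), "edible"),
    ((6.0, 5.0), "poisonous"), ((6.5, 5.5), "poisonous"),
]
test = [
    ((2.2, 0.9), "edible"), ((6.2, 5.1), "poisonous"), ((4.5, 3.5), "edible"),
]

train_acc = accuracy(train, train)  # accuracy on seen examples
test_acc = accuracy(train, test)    # accuracy on unseen examples
```

On this toy data the model is perfect on the training set but misses one of the three held-out examples, so training accuracy alone overstates how well it generalizes.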

When to apply ML

Tasks are too complex to program

- Tasks performed by animals/humans, where there is no known program:

- Driving, speech recognition, image understanding

- Tasks beyond human capabilities:

- Turning medical archives into medical knowledge, weather prediction, web search engines

Adaptivity

- Traditional programs are static. Once written and installed, they stay unchanged

- ML can adapt to new input data

- Decode handwritten text, speech recognition

Problems

- An ML system cannot predict things it does not know about

- An ML system trained on cats and dogs might label a moose as a dog, because that is the closest match among the classes it knows.
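The moose example can be sketched with a nearest-centroid classifier that only knows two classes. The features (weight in kg, height in cm) and all values are hypothetical. The point is only that the classifier has no way to answer "none of the above".

```python
def centroid(points):
    """Component-wise mean of a list of equal-length feature tuples."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(len(points[0])))


def sq_dist(a, b):
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


# Hypothetical (weight_kg, height_cm) training data for the two known classes.
training = {
    "cat": [(4.0, 25.0), (5.0, 23.0)],
    "dog": [(30.0, 60.0), (25.0, 55.0)],
}
centroids = {label: centroid(points) for label, points in training.items()}


def classify(x):
    """Return whichever known class has the closest centroid to x."""
    return min(centroids, key=lambda label: sq_dist(centroids[label], x))


moose = (400.0, 200.0)  # nothing like either class
prediction = classify(moose)
```

The moose is far from both centroids, but `classify` still returns `"dog"` because that centroid is the less distant of the two.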

Types of ML

Determined by:

- Nature of data (statistical, adversarial, benign, etc)

- Availability of outputs

- Availability of data (streaming, batch, etc)

- Interaction with environment

Supervised

Learn from desired outputs, and generalize to unseen test data

Classification - Data -> Discrete classes.

- Mushroom image -> Edible / non-edible

- Spam filtering, object detection, predicting weather class (sunny/cloudy, etc.)

Regression - Predicting a numeric value.

- Study time -> Exam scores

- Stock market, predicting weather temperatures
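The difference between the two supervised settings shows up in the type of the output. Below is a minimal sketch with synthetic study-time data: a least-squares line for regression, and a simple threshold rule (midpoint between class means) for classification. Both learners are stand-ins chosen for brevity, not methods named in the lecture.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept (one feature)."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def fit_threshold(xs, labels, positive):
    """Threshold halfway between the mean x of the positive and the other class."""
    pos = [x for x, lab in zip(xs, labels) if lab == positive]
    neg = [x for x, lab in zip(xs, labels) if lab != positive]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2


# Synthetic data: hours studied, exam score, and a pass/fail label.
hours = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
scores = [58.0, 66.0, 74.0, 82.0, 90.0, 98.0]
labels = ["fail", "fail", "fail", "pass", "pass", "pass"]

# Regression: the prediction is a number.
slope, intercept = fit_line(hours, scores)
predicted_score = slope * 7.0 + intercept

# Classification: the prediction is one of a fixed set of classes.
threshold = fit_threshold(hours, labels, positive="pass")
predicted_label = "pass" if 4.5 >= threshold else "fail"
```

Both models take the same input (hours studied); only the kind of output differs.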

🧪 -> Refresh the Info

Did you generally find the overall content understandable or compelling or relevant or not, and why, or which aspects of the reading were most novel or challenging for you and which aspects were most familiar or straightforward?

Did a specific aspect of the reading raise questions for you or relate to other ideas and findings you’ve encountered, or are there other related issues you wish had been covered?

🔗 -> Links

Resources

- Put useful links here

Connections

- Link all related words