📗 -> 06/04/25: ECS170-L28

Machine Learning Slides

DNN LLMs

🎤 Vocab

❗ Unit and Larger Context

Small summary

✒️ -> Scratch Notes

Machine Learning

Regularization

Constrain the model so it does not fit to noise

- This is done by adding penalties for large parameters

Side effects:

- Can also limit the model's ability to fit the actual data, depending on the relationship between signal and noise

This lets us pick a complex model even for a simple dataset and rely on the penalty on large parameters to keep it from overfitting

How to fit?

Add the penalty as an extra term in the loss that training minimizes

- Punish the square of the weight terms, using the squared Euclidean norm

- Euclidean norm: $\|w\|_2 = \sqrt{\sum_i w_i^2}$

- Squared Euclidean norm: $\|w\|_2^2 = \sum_i w_i^2$
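
A minimal sketch of adding the penalty to the training loss, assuming a linear model with mean squared error. The function names, the toy data, and `lam` (the penalty strength) are made up for illustration and are not from the lecture:

```python
def squared_l2_norm(weights):
    """Squared Euclidean norm: the sum of the squared weights."""
    return sum(w * w for w in weights)


def regularized_loss(weights, inputs, targets, lam):
    """Mean squared training error plus lam * (squared Euclidean norm of weights)."""
    mse = 0.0
    for x, y in zip(inputs, targets):
        prediction = sum(w * xi for w, xi in zip(weights, x))
        mse += (prediction - y) ** 2
    mse /= len(targets)
    return mse + lam * squared_l2_norm(weights)


# Hypothetical toy data: y = 2 * x1, the second feature is irrelevant.
inputs = [[1.0, 0.0], [2.0, 1.0], [3.0, 0.0]]
targets = [2.0, 4.0, 6.0]

# Weights that match the signal pay only a small penalty.
small = regularized_loss([2.0, 0.0], inputs, targets, lam=0.1)
# A large weight on the irrelevant feature raises both the error and the penalty.
large = regularized_loss([2.0, 5.0], inputs, targets, lam=0.1)
print(small, large)
```

With `lam = 0` this reduces to the plain training error, so `lam` controls how much large weights are punished.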

Takeaways for Model Improvement (generalization)

- We can improve performance by restricting number of parameters (simpler models).

- We can improve performance by getting more data.

- We can improve performance by regularization:

- Aggressive (strong) regularization results in simpler models, thus increasing bias and decreasing variance

- Weak regularization results in more complex models, thus decreasing bias while increasing variance
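
A rough sketch of how the penalty strength changes the fitted model, assuming a one-weight linear model with no intercept, where the penalized least-squares solution has a closed form. The name `fit_ridge_1d` and the data are hypothetical:

```python
def fit_ridge_1d(xs, ys, lam):
    """Minimize sum((w*x - y)^2) + lam * w^2 over a single weight w.

    Setting the derivative to zero gives w = sum(x*y) / (sum(x^2) + lam).
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


# Hypothetical noisy data, roughly y = 2x.
xs = [1.0, 2.0, 3.0]
ys = [2.1, 3.9, 6.2]

# Stronger penalties shrink the weight toward zero (simpler model, more bias).
weights = [fit_ridge_1d(xs, ys, lam) for lam in [0.0, 1.0, 10.0, 100.0]]
for lam, w in zip([0.0, 1.0, 10.0, 100.0], weights):
    print(f"lam={lam}: w={w:.3f}")
```

The weight only shrinks as `lam` grows, which is the "aggressive regularization gives simpler models" takeaway in miniature.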

Hyperparameters

Settings that are not learned as part of the model's parameters (for example, the regularization strength)

- How to find the best hyperparameters?

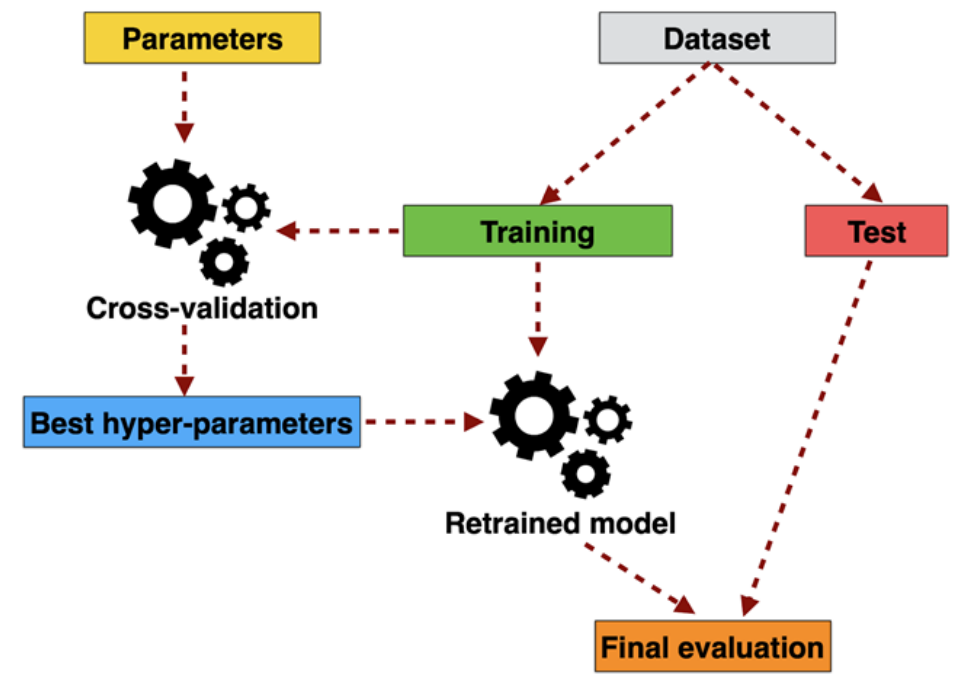

Threefold split

Have:

- Training data used for model fitting

- Validation data used for hyperparameter selection

- Test data used for evaluation
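
A minimal sketch of the threefold split, assuming a random shuffle and a 60/20/20 division. The function name, fractions, and seed are illustrative choices, not values from the lecture:

```python
import random


def three_way_split(data, val_fraction=0.2, test_fraction=0.2, seed=0):
    """Shuffle the data and split it into train, validation, and test lists."""
    items = list(data)
    random.Random(seed).shuffle(items)
    n_test = int(len(items) * test_fraction)
    n_val = int(len(items) * val_fraction)
    test = items[:n_test]                  # held out for the final evaluation
    val = items[n_test:n_test + n_val]     # used to choose hyperparameters
    train = items[n_test + n_val:]         # used to fit the model
    return train, val, test


train, val, test = three_way_split(range(10))
print(len(train), len(val), len(test))
```

The three lists do not overlap, so the test data never influences model fitting or hyperparameter selection.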

K-fold cross validation

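Split the training data into K folds. For each fold, train on the other K-1 folds and validate on the held-out fold. Use the validation results across all K folds to choose hyperparameters

Finish with the Test Data, which was never used for fitting or selection, to estimate true performance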
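
A sketch of K-fold cross validation used to pick a regularization strength, assuming the same hypothetical one-weight ridge model as a stand-in for "the model". All names, the data, and the candidate values are made up for illustration:

```python
def fit_ridge_1d(xs, ys, lam):
    """Closed-form minimizer of sum((w*x - y)^2) + lam * w^2 (one weight, no intercept)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


def k_fold_indices(n, k):
    """Split indices 0..n-1 into k contiguous folds of near-equal size."""
    folds, start = [], 0
    for i in range(k):
        size = n // k + (1 if i < n % k else 0)
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def cross_val_error(xs, ys, lam, k):
    """Average validation MSE over k folds for one hyperparameter value."""
    n = len(xs)
    total = 0.0
    for fold in k_fold_indices(n, k):
        held_out = set(fold)
        train_x = [xs[i] for i in range(n) if i not in held_out]
        train_y = [ys[i] for i in range(n) if i not in held_out]
        w = fit_ridge_1d(train_x, train_y, lam)  # fit on the other k-1 folds
        total += sum((w * xs[i] - ys[i]) ** 2 for i in fold) / len(fold)
    return total / k


# Hypothetical training data (the test data is kept aside and not touched here).
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [2.2, 3.8, 6.1, 8.3, 9.7, 12.2]

candidates = [0.0, 0.1, 1.0, 10.0]
best_lam = min(candidates, key=lambda lam: cross_val_error(xs, ys, lam, k=3))
print("selected lam:", best_lam)
```

The folds here are contiguous for simplicity; in practice the data is usually shuffled first. After selection, the model would be refit on all of the training data with `best_lam` and scored once on the test data.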

All Together:

DNNs and LLMs

Deep Neural Networks and Large Language Models

Development:

- Neural Networks

- Deep CNN / RNN

- Encoder-Decoder

- Attention

- Transformer

- Foundation Models

🧪 -> Refresh the Info

Did you generally find the overall content understandable, compelling, or relevant, and why? Which aspects of the reading were most novel or challenging for you, and which were most familiar or straightforward?

Did a specific aspect of the reading raise questions for you or relate to other ideas and findings you’ve encountered? Are there other related issues you wish had been covered?

🔗 -> Links

Resources

- Put useful links here

Connections

- Link all related words