📗 -> 05/21/25: ECS189G-L22

🎤 Vocab

❗ Unit and Larger Context

Small summary

✒️ -> Scratch Notes

Transformer walk through

Attention:

If we had

If we run

Multi-head (similar to multi-channel used in CNN)

Learn separate

in Attention Head Number 0 in Attention Head Number 1

Multi head Embedding Fusion:

- Concatenate all the attention heads into a vector

- Multiply with a weight matrix $W^O$ that was trained jointly with the model

- The result would be the $Z$ matrix that captures information from all the attention heads. We can send this forward to the FFNN

- For 32 heads, and 256

$Z = \mathrm{concat}(z_0, z_1, \ldots, z_7)\, W^O$
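A minimal NumPy sketch of the fusion step above, to make the shapes concrete. The sizes (`seq_len`, `d_head`, `d_model`) and the random matrices are assumptions for illustration only; in a real model the head outputs come from attention and `W_O` is learned.

```python
import numpy as np

rng = np.random.default_rng(0)

num_heads = 8   # matches z0..z7 in the formula above
seq_len = 4     # assumed number of tokens
d_head = 64     # assumed output size of each head
d_model = 512   # assumed model (embedding) size

# One (seq_len, d_head) output matrix per attention head (random stand-ins).
heads = [rng.normal(size=(seq_len, d_head)) for _ in range(num_heads)]

# Concatenate along the feature axis: (seq_len, num_heads * d_head).
concat = np.concatenate(heads, axis=-1)

# W_O would be trained jointly with the model; random here for illustration.
W_O = rng.normal(size=(num_heads * d_head, d_model))

# Fused output Z: one row per token, ready to send forward to the FFNN.
Z = concat @ W_O
print(concat.shape, Z.shape)  # (4, 512) (4, 512)
```

Multiplying the concatenation by `W_O` is the same as summing each head's output times its own slice of `W_O`, which is one way to see how the heads get mixed.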

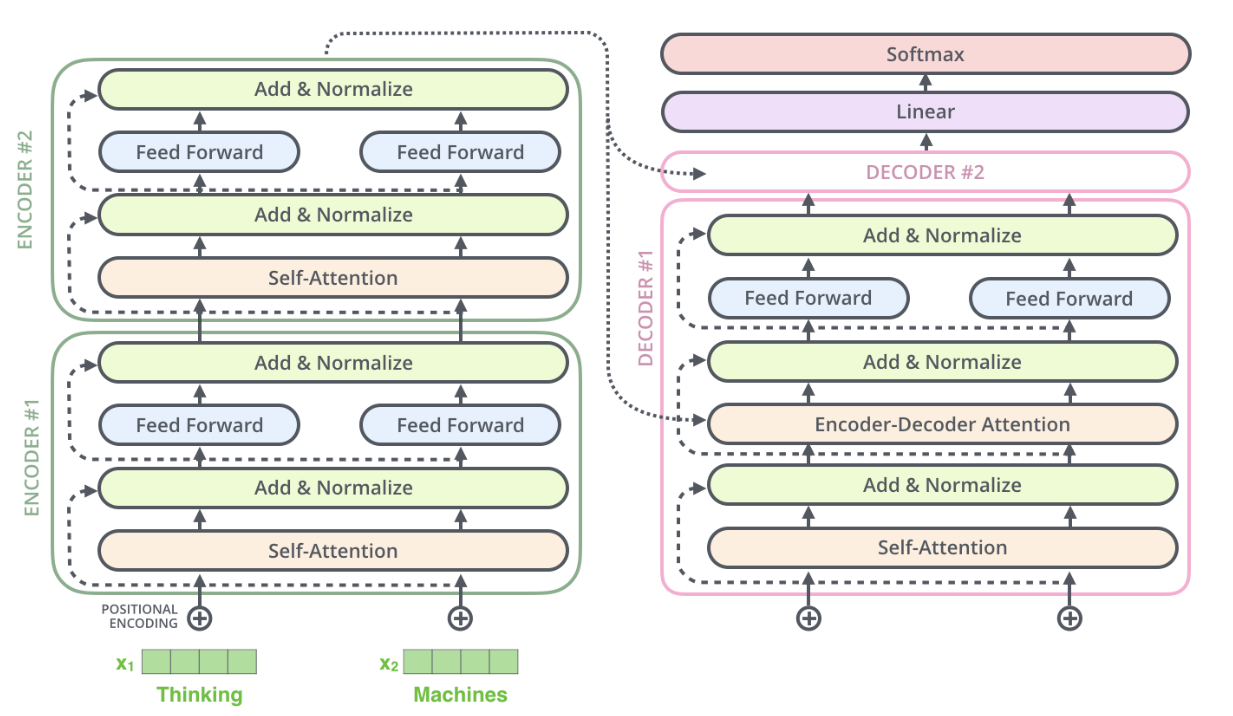

Self Attention Based Encoder

- Input Sentence

- Embed each word

- Split into 8 heads, multiply embedding X or R with weight matrices

- In all encoders other than #0, we don’t need embedding. We start directly with the output of the encoder right below this one

- Calculate Attention using Q/K/V matrices

- Concatenate resulting Z matrices, then multiply with weight matrix $W^O$ to produce the output of the layer
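The encoder steps above can be sketched in NumPy as follows. This is a sketch under assumptions: the notes do not preserve the attention formula, so the code uses the standard scaled dot-product form, softmax(QKᵀ / sqrt(d_k)) V, and all sizes, names, and random weights are hypothetical.

```python
import numpy as np

def softmax(scores):
    # Row-wise softmax, shifted by the row max for numerical stability.
    shifted = scores - scores.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

def multi_head_self_attention(X, W_Q, W_K, W_V, W_O):
    """X: (seq_len, d_model). W_Q/W_K/W_V: (num_heads, d_model, d_head).
    W_O: (num_heads * d_head, d_model)."""
    head_outputs = []
    for Wq, Wk, Wv in zip(W_Q, W_K, W_V):
        # Project the same input into this head's queries, keys, values.
        Q, K, V = X @ Wq, X @ Wk, X @ Wv
        # Assumed: scaled dot-product attention.
        weights = softmax(Q @ K.T / np.sqrt(K.shape[-1]))
        head_outputs.append(weights @ V)
    # Concatenate the per-head Z matrices, then fuse them with W_O.
    return np.concatenate(head_outputs, axis=-1) @ W_O

rng = np.random.default_rng(0)
seq_len, d_model, num_heads = 5, 16, 8   # assumed toy sizes
d_head = d_model // num_heads

X = rng.normal(size=(seq_len, d_model))  # embeddings (or output of the encoder below)
W_Q, W_K, W_V = (rng.normal(size=(num_heads, d_model, d_head)) for _ in range(3))
W_O = rng.normal(size=(num_heads * d_head, d_model))

out = multi_head_self_attention(X, W_Q, W_K, W_V, W_O)
print(out.shape)  # (5, 16)
```

The output has the same shape as the input, which is why encoders above #0 can take it directly without an embedding step.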

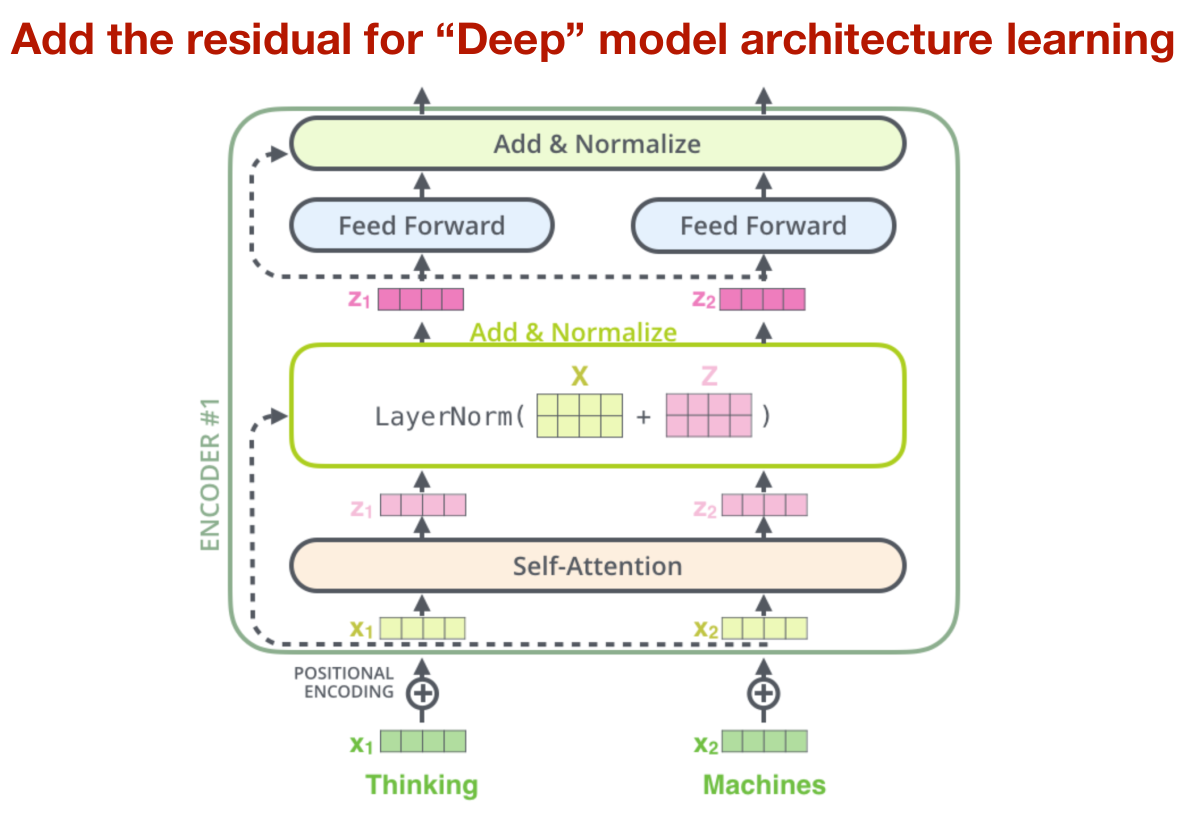

Residual for “Deep” model architecture learning

Add input x to output z, then normalize
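A small sketch of "add, then normalize", assuming the normalization is a mean/std norm over each row. The function names are made up for illustration, and the learnable scale and shift that a real layer norm usually carries are left out.

```python
import numpy as np

def layer_norm(h, eps=1e-5):
    # Normalize each row (one token / instance) to zero mean, unit variance.
    mean = h.mean(axis=-1, keepdims=True)
    var = h.var(axis=-1, keepdims=True)
    return (h - mean) / np.sqrt(var + eps)

def add_and_norm(x, z):
    # Residual connection: add the sublayer input x to its output z,
    # then normalize the sum.
    return layer_norm(x + z)

x = np.array([[1.0, 2.0, 3.0, 4.0]])    # hypothetical sublayer input
z = np.array([[0.5, -1.0, 2.0, 0.0]])   # hypothetical sublayer output
out = add_and_norm(x, z)
print(out.shape)  # (1, 4)
```

Because `x` is added back in, the original signal has a direct path around the sublayer, which is what makes stacking many layers trainable.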

- Normalization:

Normalization

Given

- If there is a large value, we prefer to normalize the vector

- Different norm methods:

- Min-max normalization:

- Given a vector, find the minimum and maximum values in the vector. Two scalars.

- Here min=0.1, max=100

- Normalizes x: $x' = \dfrac{x - \min}{\max - \min}$

- Mean STD Normalization

- Find mean and STD. They are both scalars

- Layer norm does mean/std norm, finding mean and STD across each row (all features of one instance)

- Layer norm normalizes instances

- Batch norm does mean/std norm, but across columns (one feature over all instances in the batch)

- Batch norm normalizes features
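A sketch comparing the normalization methods listed above. The example vector and matrix are invented (the vector only reuses min=0.1 and max=100 from the notes), and the helper names are hypothetical. The only difference between the layer-norm-style and batch-norm-style calls is the axis.

```python
import numpy as np

def min_max_normalize(x):
    # Rescale so the minimum maps to 0 and the maximum maps to 1.
    return (x - x.min()) / (x.max() - x.min())

def mean_std_normalize(X, axis, eps=1e-5):
    # Subtract the mean and divide by the STD along the chosen axis.
    mean = X.mean(axis=axis, keepdims=True)
    std = X.std(axis=axis, keepdims=True)
    return (X - mean) / (std + eps)

# Hypothetical vector with min=0.1 and max=100.
x = np.array([0.1, 2.0, 50.0, 100.0])
x_scaled = min_max_normalize(x)

# Rows are instances, columns are features (made-up values).
X = np.array([[1.0, 200.0, 3.0],
              [2.0, 100.0, 9.0],
              [3.0, 300.0, 6.0]])

layer_style = mean_std_normalize(X, axis=1)  # per row: normalizes instances
batch_style = mean_std_normalize(X, axis=0)  # per column: normalizes features

print(x_scaled.min(), x_scaled.max())  # 0.0 1.0
```

After the row-wise version every instance has mean close to 0; after the column-wise version every feature does.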


A Deep Transformer with Encoder and Decoder

Pulling all of the above together

- The encoder and decoder are actually very similar

🧪 -> Refresh the Info

Did you generally find the overall content understandable or compelling or relevant or not, and why, or which aspects of the reading were most novel or challenging for you and which aspects were most familiar or straightforward?

Did a specific aspect of the reading raise questions for you or relate to other ideas and findings you’ve encountered, or are there other related issues you wish had been covered?

🔗 -> Links

Resources

- Put useful links here

Connections

- Link all related words