📗 -> 06/04/25: ECS189G-L27

🎤 Vocab

❗ Unit and Larger Context

Summary of Sec_16: Graph-Bert

SGC operator and its problem

- What is the suspended animation problem?
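A minimal numpy sketch of why stacking the SGC operator leads to suspended animation. It assumes the usual renormalized adjacency Â = D̃^(-1/2) (A + I) D̃^(-1/2) and leaves out weights and activations to isolate the propagation. The 4-node path graph, the random features, and the helper `spread` (which measures how distinguishable the row-normalized node representations still are) are illustrative assumptions.

```python
import numpy as np

def normalized_adjacency(A):
    """Renormalized adjacency D~^{-1/2} (A + I) D~^{-1/2} used by the SGC operator."""
    A_tilde = A + np.eye(A.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(A_tilde.sum(axis=1))
    return A_tilde * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def propagate(A_hat, X, k):
    """Apply k SGC propagation steps (no weights, no activation)."""
    H = X
    for _ in range(k):
        H = A_hat @ H
    return H

def spread(H):
    """Largest distance of a unit-normalized node row from the mean row (0 = indistinguishable)."""
    Hn = H / np.linalg.norm(H, axis=1, keepdims=True)
    return float(np.max(np.linalg.norm(Hn - Hn.mean(axis=0), axis=1)))

# Toy 4-node path graph 0-1-2-3 with random features (illustrative only).
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
X = np.random.default_rng(0).normal(size=(4, 3))
A_hat = normalized_adjacency(A)
for k in (1, 5, 50):
    print(k, round(spread(propagate(A_hat, X, k)), 6))
```

As k grows, the spread shrinks toward zero: every node's representation becomes a scaled copy of the same vector, so a very deep model's output no longer depends on which node it is looking at, regardless of training.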

GResNet with Graph Residual Learning

- Graph residual terms in GResNet

- GResNet architecture and Performance
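A sketch of one GResNet layer. It assumes the residual term is added inside the activation, H^(k) = ReLU(Â H^(k-1) W^(k) + R(H^(k-1), X; G)). The four residual variants below (naive, graph-naive, raw, graph-raw) are assumed forms for illustration and should be checked against the slides. `W_m` is a hypothetical projection from the raw input size to the hidden size.

```python
import numpy as np

def normalized_adjacency(A):
    """Renormalized adjacency D~^{-1/2} (A + I) D~^{-1/2}."""
    A_tilde = A + np.eye(A.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(A_tilde.sum(axis=1))
    return A_tilde * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def residual(kind, H_prev, X, A_hat, W_m):
    """Residual term R(H^(k-1), X; G); the four variants are assumptions for illustration."""
    if kind == "naive":          # previous layer's output as-is
        return H_prev
    if kind == "graph-naive":    # previous output, smoothed over the graph
        return A_hat @ H_prev
    if kind == "raw":            # raw input features, projected to the hidden size
        return X @ W_m
    if kind == "graph-raw":      # raw input features, smoothed and projected
        return A_hat @ X @ W_m
    raise ValueError(f"unknown residual kind: {kind}")

def gresnet_layer(H_prev, X, A_hat, W, W_m, kind="graph-raw"):
    """H^(k) = ReLU(A_hat H^(k-1) W + R(H^(k-1), X; G))."""
    return np.maximum(A_hat @ H_prev @ W + residual(kind, H_prev, X, A_hat, W_m), 0.0)

# Toy usage: a 10-layer stack on a 4-node path graph (random weights, illustrative only).
rng = np.random.default_rng(0)
A = np.array([[0, 1, 0, 0], [1, 0, 1, 0], [0, 1, 0, 1], [0, 0, 1, 0]], dtype=float)
A_hat = normalized_adjacency(A)
X = rng.normal(size=(4, 3))
W_m = rng.normal(size=(3, 2))
H = X
for _ in range(10):
    W = rng.normal(scale=0.5, size=(H.shape[1], 2))
    H = gresnet_layer(H, X, A_hat, W, W_m)
print(H.round(3))
```

The raw and graph-raw variants re-inject X at every layer, which is the intuition for why graph residual terms keep the input signal from being washed out as depth grows.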

Graph-Bert

- Graph-Bert overall architecture

- Sub-graph batching with PageRank

- Positional embedding

- Graph-Bert pre-training and fine-tuning

✒️ -> Scratch Notes

Starts from “A dive into graph-bert model architecture”

Step 2: Initial Embeddings for any Node

Step 3: Graph-Transformer Based Encoder for Subgraph Representation Learning

Step 4: Subgraph Representation Fusion as Target Node Final Representation
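Steps 3 and 4 can be sketched in numpy. This sketch assumes a single attention head, a plain residual connection (Graph-Bert's own graph residual term may differ), and average pooling as the fusion function. `H0` stands for the Step 2 initial embeddings of the target node and its sampled context nodes. All weights are hypothetical placeholders.

```python
import numpy as np

def softmax(Z):
    """Row-wise softmax, shifted by the row max for numerical stability."""
    Z = Z - Z.max(axis=1, keepdims=True)
    E = np.exp(Z)
    return E / E.sum(axis=1, keepdims=True)

def transformer_layer(H, Wq, Wk, Wv):
    """Step 3: single-head self-attention over the subgraph's nodes, plus a residual term."""
    Q, K, V = H @ Wq, H @ Wk, H @ Wv
    attn = softmax(Q @ K.T / np.sqrt(K.shape[1]))
    return attn @ V + H

def encode_subgraph(H0, layers):
    """Stack the encoder layers, then fuse (Step 4) by averaging the node rows."""
    H = H0
    for Wq, Wk, Wv in layers:
        H = transformer_layer(H, Wq, Wk, Wv)
    return H.mean(axis=0)

# Toy usage: target node + 2 context nodes, embedding size 4, two layers.
rng = np.random.default_rng(0)
H0 = rng.normal(size=(3, 4))
layers = [tuple(rng.normal(scale=0.3, size=(4, 4)) for _ in range(3)) for _ in range(2)]
z_target = encode_subgraph(H0, layers)
print(z_target.shape)
```

Because no links are passed to the encoder, the subgraph is treated as a set of nodes. The structure enters only through which nodes were sampled and through the positional embeddings in Step 2.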

For each node in the graph, define a corresponding embedding vector

- Similar to the GCN architecture
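A sketch of Step 2. It assumes each node's initial embedding is the sum of a linear raw-feature embedding (like GCN's X W) and three sinusoidal positional embeddings: a WL role code, the node's rank in the intimacy ordering, and its hop distance to the target. The WL codes are assumed to be precomputed integers (`wl_codes` is hypothetical), and unreachable nodes get an arbitrary large hop count.

```python
import numpy as np
from collections import deque

def sinusoid(pos, d):
    """Transformer-style sinusoidal embedding of an integer position (d must be even)."""
    i = np.arange(d // 2)
    angles = pos / np.power(10000.0, 2 * i / d)
    pe = np.empty(d)
    pe[0::2] = np.sin(angles)
    pe[1::2] = np.cos(angles)
    return pe

def hop_distances(adj, target):
    """BFS hop count from the target node to every reachable node."""
    dist = {target: 0}
    queue = deque([target])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist

def initial_embeddings(X, W_e, subgraph, adj, wl_codes, d):
    """Step 2: raw-feature embedding plus three positional embeddings, combined by summation."""
    hops = hop_distances(adj, subgraph[0])        # subgraph[0] is the target node
    rows = []
    for rank, v in enumerate(subgraph):           # rank = position in the intimacy ordering
        e_x = X[v] @ W_e                          # raw attribute embedding (linear)
        e_r = sinusoid(wl_codes[v], d)            # WL absolute role
        e_p = sinusoid(rank, d)                   # intimacy-based relative position
        e_d = sinusoid(hops.get(v, len(adj)), d)  # hop distance to the target
        rows.append(e_x + e_r + e_p + e_d)
    return np.stack(rows)

# Toy usage: path graph 0-1-2-3, one-hot features, subgraph [target 0, contexts 1 and 2].
adj = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
X = np.eye(4)
W_e = np.random.default_rng(0).normal(size=(4, 6))
H0 = initial_embeddings(X, W_e, [0, 1, 2], adj, wl_codes=[0, 1, 1, 0], d=6)
print(H0.shape)
```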

Subgraph sampling:

- Minibatch
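A sketch of PageRank-based subgraph sampling. It assumes the intimacy matrix S = α (I − (1 − α) Ā)^(-1) with the column-normalized adjacency Ā = A D^(-1) and α = 0.15, and that a node's context is its top-k most intimate other nodes. Isolated nodes would divide by zero and are assumed absent. Fixed-size subgraphs can then be stacked into minibatches. `make_batch` is a hypothetical helper.

```python
import numpy as np

def intimacy_matrix(A, alpha=0.15):
    """PageRank-based intimacy S = alpha * (I - (1 - alpha) * A D^{-1})^{-1}."""
    n = A.shape[0]
    A_bar = A / A.sum(axis=0, keepdims=True)      # column j divided by deg(j)
    return alpha * np.linalg.inv(np.eye(n) - (1 - alpha) * A_bar)

def sample_subgraph(S, target, k):
    """[target] + its top-k most intimate other nodes (target excluded from the ranking)."""
    scores = S[target].copy()
    scores[target] = -np.inf
    context = np.argsort(-scores)[:k]
    return [target] + context.tolist()

def make_batch(S, targets, k):
    """Stack fixed-size subgraphs (node ids) into one array of shape (batch, k + 1)."""
    return np.array([sample_subgraph(S, t, k) for t in targets])

# Toy usage: star graph with center 0 and leaves 1..4.
A = np.zeros((5, 5))
A[0, 1:] = A[1:, 0] = 1
S = intimacy_matrix(A)
print(make_batch(S, [0, 1, 2], k=2))
```

For a leaf of the star, the center is its most intimate node, so `sample_subgraph(S, 1, 1)` returns `[1, 0]`.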

Step 5: Pre-Training, Transfer, Fine-Tuning

Unsupervised Pre-training

- Node raw attribute reconstruction (reconstruct each node's raw attributes from its learned representation and compare them with the ground-truth attributes)

- Graph structure recovery
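Both pre-training objectives can be sketched as losses on the learned representations Z (one row per node). Assumptions: the attribute decoder is a single hypothetical linear map `W_dec`, and structure recovery compares cosine similarities between representations with the intimacy scores S, averaged over all node pairs.

```python
import numpy as np

def reconstruction_loss(Z, X, W_dec):
    """Node raw attribute reconstruction: average squared error per node between X and its decoding."""
    X_hat = Z @ W_dec
    return float(np.mean(np.sum((X - X_hat) ** 2, axis=1)))

def structure_recovery_loss(Z, S):
    """Graph structure recovery: squared gap between cosine similarities and intimacy scores."""
    Zn = Z / np.linalg.norm(Z, axis=1, keepdims=True)
    S_hat = Zn @ Zn.T
    return float(np.sum((S - S_hat) ** 2) / S.shape[0] ** 2)

# Toy check: representations that match the targets give zero loss for both objectives.
X = np.array([[1.0, 0.0], [0.0, 1.0]])
print(reconstruction_loss(X, X, np.eye(2)), structure_recovery_loss(X, np.eye(2)))
```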

Fine-Tuning

- Node classification: cross-entropy loss between the predicted and true class labels

- Graph clustering: distance between each node's representation and the mean of its assigned cluster
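A sketch of the two fine-tuning objectives. It assumes the logits come from a linear head on the final representations and that clustering uses a k-means-style objective with given hard assignments. How the assignments themselves are updated is not covered here.

```python
import numpy as np

def cross_entropy(logits, labels):
    """Node classification: mean cross-entropy between softmax(logits) and integer labels."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-np.mean(log_probs[np.arange(len(labels)), labels]))

def clustering_loss(Z, assignments, n_clusters):
    """Graph clustering: summed squared distance from each representation to its cluster mean."""
    loss = 0.0
    for c in range(n_clusters):
        members = Z[assignments == c]
        if len(members):
            loss += float(np.sum((members - members.mean(axis=0)) ** 2))
    return loss

logits = np.array([[2.0, 0.5, -1.0], [0.1, 0.2, 3.0]])
print(cross_entropy(logits, np.array([0, 2])))
Z = np.array([[0.0, 0.0], [2.0, 0.0], [10.0, 10.0]])
print(clustering_loss(Z, np.array([0, 0, 1]), n_clusters=2))
```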

Moving on to the Final Review Slides

Final Exam

Take-home, Sat + Sun (48 hrs)

Open everything, but individual

25% of grade, cumulative

One calculation question on the Transformer:

- Given tables (x, etc.), calculate resulting Z

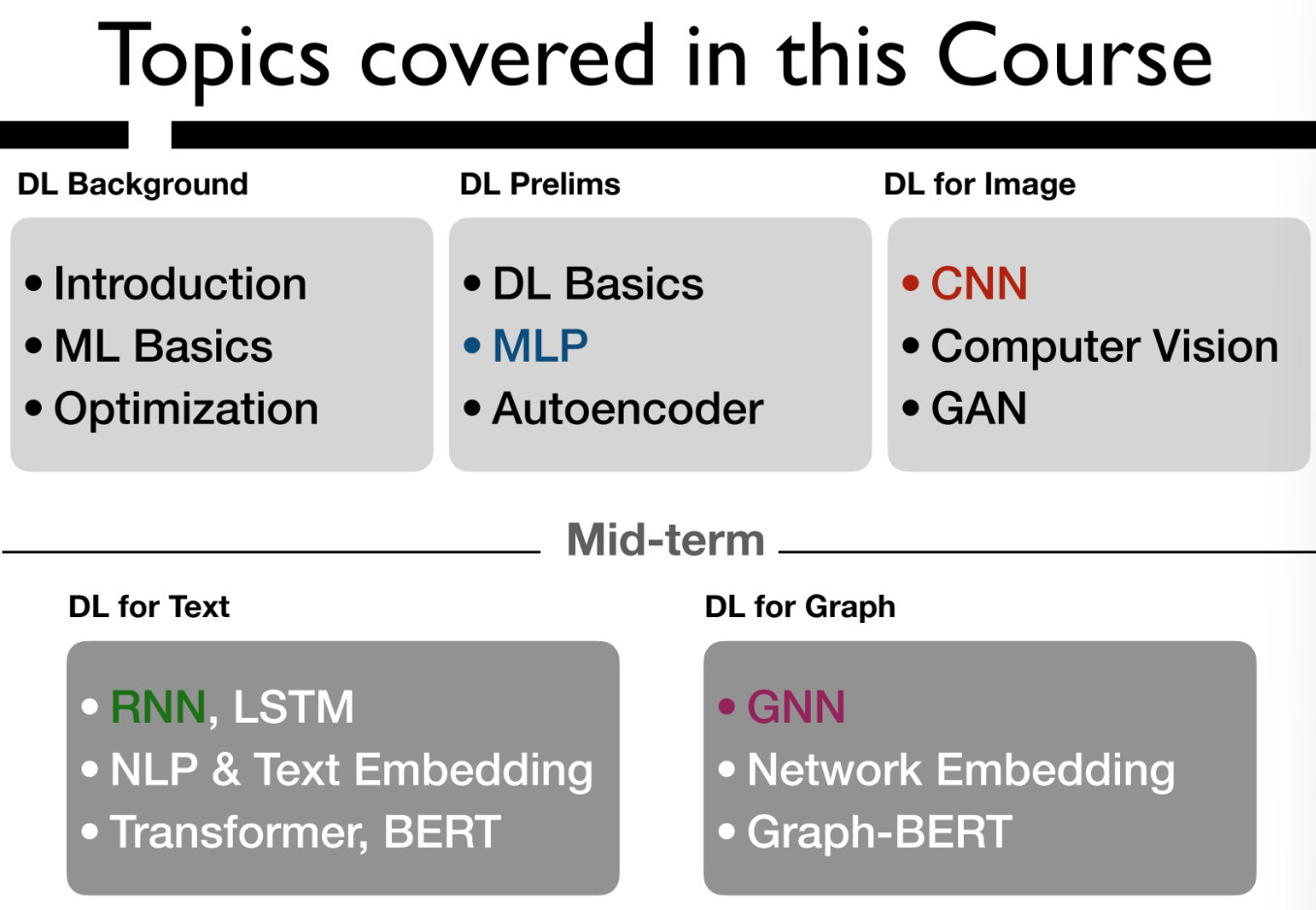
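For checking hand calculations, the standard scaled dot-product attention is Z = softmax(Q Kᵀ / √d_k) V with Q = X W^Q, K = X W^K, V = X W^V. This sketch assumes that convention (rows of X are tokens, softmax applied row-wise). The exam's tables may use different shapes or a multi-head variant, so treat this as a checking aid rather than the exam's exact setup.

```python
import numpy as np

def attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product attention; each row of X is one token."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    scores = Q @ K.T / np.sqrt(K.shape[1])
    scores = scores - scores.max(axis=1, keepdims=True)   # stability; softmax is unchanged
    weights = np.exp(scores) / np.exp(scores).sum(axis=1, keepdims=True)
    return weights @ V, weights

# Tiny hand-checkable example: two tokens, identity projections.
X = np.array([[1.0, 0.0], [0.0, 1.0]])
W = np.eye(2)
Z, weights = attention(X, W, W, W)
print(weights.round(4))
print(Z.round(4))
```

With identity weights, Q Kᵀ = I, so each token attends to itself with weight e^(1/√2) / (e^(1/√2) + 1) ≈ 0.67 and to the other token with the remainder.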

Topics Review

RNN & LSTM

Sequential data

RNN architecture specifically

Gradient exploding/vanishing problem

LSTM
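A minimal sketch of a vanilla RNN cell, h_t = tanh(W_h h_{t-1} + W_x x_t + b), and of the backprop-through-time Jacobian ∂h_T/∂h_0 = ∏_t diag(1 − h_t²) W_h. The repeated product is why gradients vanish or explode over long sequences. The toy uses zero inputs so every h_t stays 0, tanh' = 1, and each step multiplies the gradient by exactly W_h.

```python
import numpy as np

def rnn_forward(xs, W_h, W_x, b, h0):
    """Vanilla RNN: h_t = tanh(W_h h_{t-1} + W_x x_t + b); returns [h_0, ..., h_T]."""
    hs = [h0]
    for x in xs:
        hs.append(np.tanh(W_h @ hs[-1] + W_x @ x + b))
    return hs

def jacobian_norm(hs, W_h):
    """Spectral norm of dh_T/dh_0 = J_T ... J_1 with J_t = diag(1 - h_t^2) W_h."""
    J = np.eye(W_h.shape[0])
    for h in hs[1:]:
        J = np.diag(1 - h ** 2) @ W_h @ J
    return float(np.linalg.norm(J, 2))

# Toy demo over T = 20 steps: scale 0.5 vanishes (0.5**20), scale 1.5 explodes (1.5**20).
T, n = 20, 3
xs = [np.zeros(n)] * T
for scale in (0.5, 1.5):
    W_h = scale * np.eye(n)
    hs = rnn_forward(xs, W_h, np.eye(n), np.zeros(n), np.zeros(n))
    print(scale, jacobian_norm(hs, W_h))
```

LSTM's additive cell-state update, c_t = f_t ⊙ c_{t-1} + i_t ⊙ g_t, gives gradients a path that is not repeatedly multiplied by W_h.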

NLP & Word Embedding

NLP history

Word embeddings - specifically word2vec

Text generation with RNN
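word2vec's skip-gram variant is trained on (center, context) word pairs taken from a sliding window. CBOW instead predicts the center word from its context. Below is a minimal sketch of skip-gram pair generation, with the window size as an assumed parameter. The real word2vec also subsamples frequent words and uses negative sampling or hierarchical softmax, which are omitted here.

```python
def skipgram_pairs(tokens, window):
    """All (center, context) pairs with the context within +/- window positions of the center."""
    pairs = []
    for i, center in enumerate(tokens):
        for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

print(skipgram_pairs(["the", "cat", "sat"], window=1))
```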

Transformer & BERT

Attention

Transformer

BERT

GNN

Graph neural network intro

SGC operator and GCN

GAT

GNN Application
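A single-head GAT layer can be sketched with the standard formulation e_ij = LeakyReLU(aᵀ[W h_i ‖ W h_j]), α_ij = softmax of e_ij over j ∈ N(i) ∪ {i}, and h'_i = Σ_j α_ij W h_j. Unlike GCN, whose neighbor weights are fixed by Â, GAT learns them. The output nonlinearity and multi-head concatenation are omitted. The 0.2 LeakyReLU slope is assumed as the common default.

```python
import numpy as np

def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)

def gat_layer(H, A, W, a):
    """Single-head GAT: attention over each node's neighbors, self-loop included."""
    Wh = H @ W                                     # (n, d')
    d = Wh.shape[1]
    # a^T [Wh_i || Wh_j] splits into a source half and a neighbor half.
    e = leaky_relu((Wh @ a[:d])[:, None] + (Wh @ a[d:])[None, :])
    mask = (A + np.eye(A.shape[0])) > 0
    e = np.where(mask, e, -np.inf)                 # non-neighbors get zero weight
    e = e - e.max(axis=1, keepdims=True)
    alpha = np.exp(e)
    alpha = alpha / alpha.sum(axis=1, keepdims=True)
    return alpha @ Wh, alpha

# Toy usage: path graph 0-1-2; with a = 0 the attention is uniform over each neighborhood.
A = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)
out, alpha = gat_layer(np.eye(3), A, np.eye(3), np.zeros(6))
print(alpha.round(3))
```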

GNN Applications & Network Embedding

Heterogeneous information networks (HIN)

GNN for heterogeneous information network embedding

GNN for recommender system learning
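Bayesian Personalized Ranking (BPR), which appears in the covered-models list, is a common ranking objective for recommender models. The sketch assumes dot-product scores between user embeddings P and item embeddings Q (which a GNN could produce). It also assumes triples (u, i, j) in which user u interacted with item i but not with item j.

```python
import numpy as np

def bpr_loss(P, Q, triples):
    """Mean of -log sigmoid(score(u, i) - score(u, j)) over (user, positive, negative) triples."""
    total = 0.0
    for u, i, j in triples:
        diff = P[u] @ Q[i] - P[u] @ Q[j]
        total += np.logaddexp(0.0, -diff)   # log(1 + exp(-diff)) = -log sigmoid(diff), stable
    return float(total / len(triples))

# Toy usage: one user whose embedding scores item 0 above item 1.
P = np.array([[1.0, 0.0]])
Q = np.array([[2.0, 0.0], [0.0, 2.0]])
print(bpr_loss(P, Q, [(0, 0, 1)]), bpr_loss(P, Q, [(0, 1, 0)]))
```

The loss is small when the observed item outranks the unobserved one and large when the order is reversed, so minimizing it pushes observed items up each user's ranking.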

GResNet & Graph-Bert

SGC operator and its problem

GResNet with Graph Residual Learning

Graph-Bert

What the course did and didn't cover

Covered:

- architecture

- Motivation

Didn’t cover: “tricks”

- dropout

- weight decay

- batchnorm

- Xavier initialization

- Data augmentation

- etc.

Suggestions

CS changes quickly

Find an interest and study it hard, even through self-study.

Open mind, but not swept away by hype.

Covered

Existing DL models/algorithms and the year each was proposed

- Back-propagation Algorithm (1974)

- GD, SGD, Mini-Batch SGD

- Momentum (1999), Adagrad (2011), Adam (2014)

- Perceptron (1958), Multi-Layer Perceptron (1974)

- Auto-Encoder (1986), VAE (2014)

- CNN (1998), ResNet (2015)

- GAN (2014)

- RNN (1986), Bi-RNN (1997)

- LSTM (1997), GRU (2014)

- Skip-gram (2013), CBOW (2013)

- Transformer (2017), BERT (2019)

- GCN (2017), GAT (2018)

- RGCN (2017), BPR (2009)

- GResNet (2019), Graph-Bert (2020)

🧪 -> Refresh the Info

Did you generally find the overall content understandable, compelling, or relevant, and why? Which aspects of the reading were most novel or challenging for you, and which were most familiar or straightforward?

Did a specific aspect of the reading raise questions for you or relate to other ideas and findings you’ve encountered? Are there other related issues you wish had been covered?

🔗 -> Links

Resources

- Put useful links here

Connections

- Link all related words