Abstract:

- CNN used to study the features of objects and classify them

- LSTM (an RNN) is used since text is sequenctial

- To deal with different placements of the text in the image, Connectionist Temporal Classification (CTC) loss is employed

The model was trained with nearly 86000 samples of human handwriting and was validated with 10000 samples. After training for several epochs, the model registered 94.94% accuracy and a loss of 0.147 on training data and around 85% accuracy and a loss of 1.105 on validation data.

Section I: Intro

Convolution used

LSTM divides the image into time steps and attempts to understand where each character occurs and what it means

Since human handwriting isn’t even, LSTM would have trouble. To remedy this, we use CTC loss.

CTC attempts all possible placements of characters and takes the sum of all probabilities.

Section II: Existing System

Segmentation of words -> character segmentation -> prediction

- Not always feasible, diving characters not easy. However, strategies like this have reached 89.6% accuracy (on Devanagari numerals).

- Hidden Markov Models? (HMM)

Section III: Proposed Model

Data Pre-Processing

Greyscaling:

Trained Model

Convolutional Layers work well with max-pooling layers succeeing it

- Calculates the largest value of a feature map generated by the convolutional layer

- Only important/prominent features consider. Minor features ignored. Trains faster.

CNN

CNN uses the convolution operation to extract high-level features of an image, such as edges, and discard other unimportant low lever features

Recurrent Neural Networks and LSTM

RNNs useful when sequence in which the data occurs is important

LSTM has 3 gates viz:

- Input gate to decide whetehr to let the info into the memory

- Output gate to gecide whether to let the input affect the output at the current step

- Forget gate decies when to deem information non-essential and forget it

Gates in LSTM are a sigmoid, and in general either 0 or 1

CNN needed because:

If LSTM only is used, placement of the word in the image is significant and each character in the text label needs to be placed exactly where the character appeared in the text

Important Preprocessing

In order to generalize to other text:

- Input text has to be resized to the same length the model expects

- IAM handwriting data has to be augmented with varying lighting (since the originals all have the same lighting)

Section IIII: Experimental Analysis and Results

The following python libraries are used to implement the proposed model. Tensorflow, Open CV and Numpy. Tensorflow provides a simple interface to implement a deep learning model. Open CV is used to read images and manipulate those images accordingly. The word segmentation algorithm mainly uses Open CV and its find_countours() method to separate words from each other in the document. Numpy library is used to perform complex mathematical operations and it provides a NumPy array object which the TensorFlow model uses

Word Segmentation

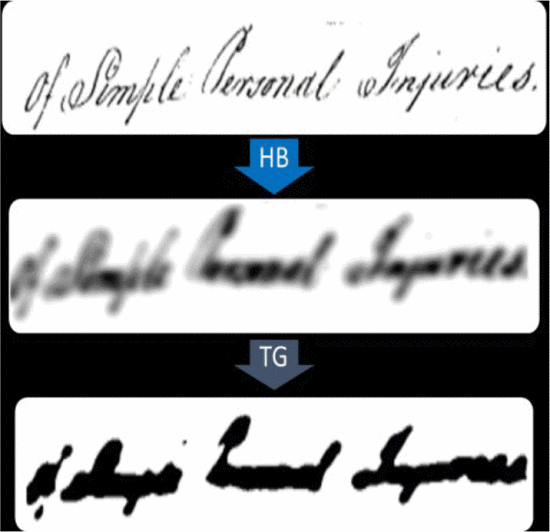

The process is as follows:

- Horizontal blurring, to combine within word contours, but no so much as to combine different words

- Images are thresholder so that values are black or white

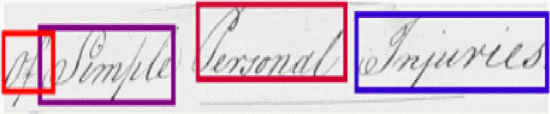

- OpenCV’s

find_contours()used to draw contours around every word - Use the counters to cut each word into its own image

Neural Networks

A model is created with tensorflow.keras.Sequential()

- Layers added:

model.add() - Convolutional layers added:

tensorflow.keras.layers.conv2d() - Max pooling added:

tensorflow.keras.layers.maxpooling2d() - Then, two layers of bidirectional LSTM:

Bidirectional(tensorflow.keras.layers.LSTM())- Activation is softmax

- Model compiled and trained with:

model.compile()model.fil()

- Saved with:

model.save() - Predicted with:

model.predict(word)

Activations:

Options

- Sigmoid:

- Tanh:

- ReLU

- Leaky ReLU

ReLU chosen. Popular for CNN, and helps to mitigate the vanishing gradient problem

Optimizer and Loss Function:

For a model to predict correctly, it is trained for multiple epochs. Optimizers and loss functions improve the model for the next epoch. CTC (Connectionist Temporal Classification) solves the problem of alignment of the characters in the image, hence this is chosen as the loss function. Adam is chosen as the optimizer.