📗 -> 10/8/24: Networks of Neurons

🎤 Vocab

❗ Unit and Larger Context

Which aspects of thought and behavior seem naturally explainable in terms of the detector model of the neuron?

- Sensory perception lends itself easily to the model

- Directing attention and resources (allocating more resources to a stimulus) also fits the model easily

- Active recall

- Cognitive disorders: small alterations to the model can lead to noisy detection, like we saw in the lab

Which aspects of thought and behavior don’t seem naturally explainable in terms of the detector model of the neuron?

- Subconscious decisions?

- Combinations of large numbers of neurons

- Internal monologue

- Consciousness / Why is there a first person perspective?

- Non-stimulus based cognition

- Trauma responses?

✒️ -> Scratch Notes

The learning question above, about the feasibility of the detector model, asks how close the model can take us toward the brain-in-a-vat scenario.

Excitatory and Inhibitory Neurons

Excitatory = main info processing, 85% of neurons, long-range connections

Inhibitory = local, activity regulation and competition

competitive dynamics between neurons?

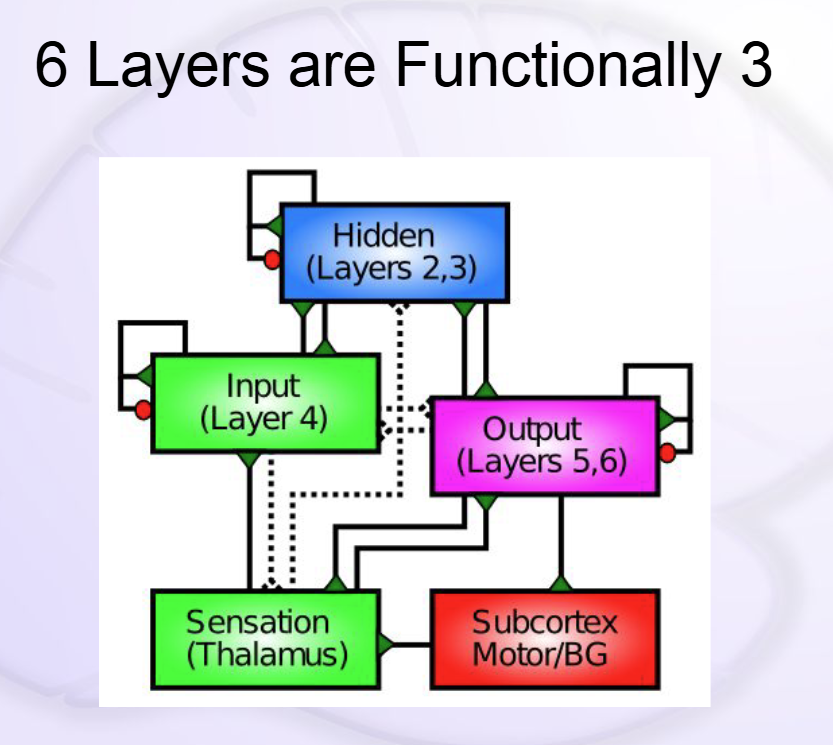

The entire cortex has 6 layers.

- A = Exceptionally large layer 4. Processes inputs coming in from the thalamus and the visual world.

- B = Expansions of layers 2 and 3. Internal processing of information.

- C = Expansions of layers 5 and 6. Specialized for motor outputs and for acting on the world.

- D = Prefrontal cortical region. Has all layers.

Layer 1 = Sometimes gets included with the hidden layers (2, 3)

- Solid lines are strong connections between layers

- Dashed lines are weaker, and we don’t include them in our models

- Inhibition is local: a layer can only inhibit itself

This is a simplified introduction

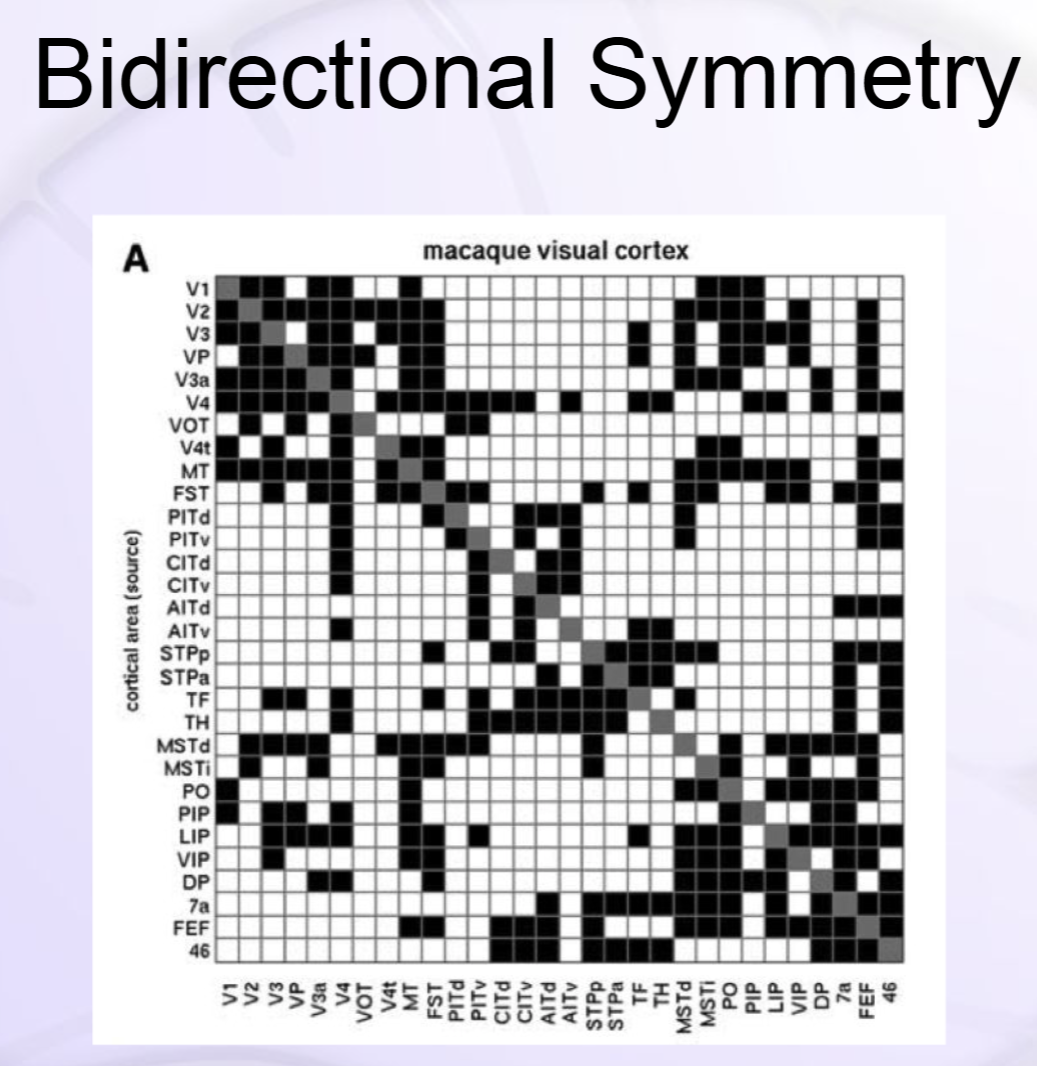

Connections are largely symmetric:

- The image above shows the strength of the connection from one area to another

Biology -> Function

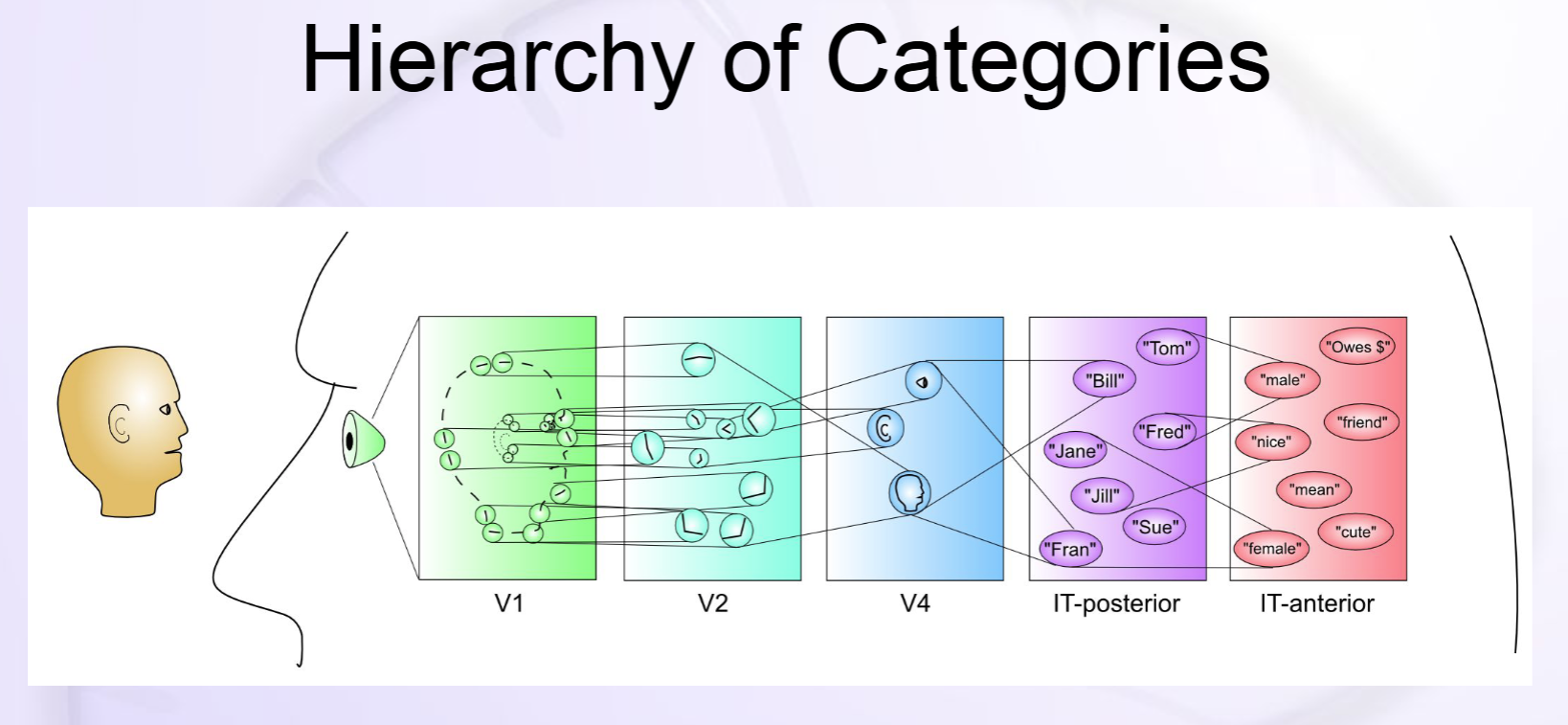

- Feedforward excitation -> Categorization

- Feedback excitation -> Attractor Dynamics

- Lateral Inhibition -> Competition, regulation
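A minimal sketch of the "lateral inhibition -> competition" idea, assuming a k-winners-take-all shortcut: one shared inhibition level is placed between the k-th and (k+1)-th most excited units, so only the strongest k stay active. The function name `kwta` and the example numbers are made up for illustration; this is not the exact mechanism from lecture.

```python
def kwta(net_inputs, k):
    """Keep roughly the k most excited units active; silence the rest.

    Stand-in for lateral inhibition: a shared inhibition level is set
    halfway between the k-th and (k+1)-th strongest net inputs.
    """
    if not 0 < k < len(net_inputs):
        raise ValueError("k must be between 1 and len(net_inputs) - 1")
    ranked = sorted(net_inputs, reverse=True)
    # Shared inhibition level (assumed rule, chosen for simplicity).
    inhibition = (ranked[k - 1] + ranked[k]) / 2.0
    # Activity is whatever excitation remains above the inhibition level.
    return [max(0.0, x - inhibition) for x in net_inputs]


# Hypothetical excitatory net inputs for five units in one layer.
excitation = [0.2, 0.9, 0.4, 0.7, 0.1]
activity = kwta(excitation, k=2)
print(activity)  # only the two most excited units remain above zero
```

The winners keep graded activity (the stronger unit stays stronger), while the shared inhibition regulates how many units in the layer are active at once.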

The value of neural processing lies in transforming information, not just copying it.

Detectors Naturally Perform Categorization

Categorizing things properly is at least 80% of the solution to any problem:

- Object recog

- Spoken word recog

- Idiom recog

- Relationship recog

- Person recog

- Action recog

- …
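A rough sketch of how a detector performs categorization, assuming each detector takes a weighted sum of input features and is active only above a threshold. The feature order, weights, thresholds, and category names are all hypothetical, picked just to make the example concrete.

```python
def detector(features, weights, threshold):
    """Graded detector output: weighted evidence above threshold, else 0."""
    net_input = sum(f * w for f, w in zip(features, weights))
    return max(0.0, net_input - threshold)


# Hypothetical feature order: [has_fur, has_wings, barks]
DOG_WEIGHTS = [0.5, -0.5, 1.0]
BIRD_WEIGHTS = [-0.5, 1.0, -0.5]


def categorize(features):
    """Return the label of the most active detector, or None if all are silent."""
    scores = {
        "dog": detector(features, DOG_WEIGHTS, threshold=0.5),
        "bird": detector(features, BIRD_WEIGHTS, threshold=0.5),
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


print(categorize([1, 0, 1]))  # furry, no wings, barks
print(categorize([0, 1, 0]))  # no fur, wings, no bark
print(categorize([0, 0, 0]))  # no evidence for either category
```

Many different inputs that share the weighted features land in the same category, which is the sense in which the detector transforms information rather than copying it.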

Representations

We want them to be:

- Graded

- Distributed (e.g. coarse coding)

    - Simultaneously capture multiple meanings

- Capture similarity

- Efficient

- Simplified (e.g. stereotypes)

Coarse Coding Efficiency
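A small sketch of coarse coding, assuming five broadly tuned units with Gaussian tuning curves over a value between 0 and 1. The preferred values, tuning width, and the weighted-average readout are assumptions for illustration. The point is that a handful of overlapping, graded units can represent many more values than there are units, and that similar values get similar activity patterns.

```python
import math

PREFERRED = [0.0, 0.25, 0.5, 0.75, 1.0]  # hypothetical preferred values
WIDTH = 0.25                              # broad tuning, so units overlap


def encode(x):
    """Graded, distributed pattern: each unit responds by closeness to x."""
    return [math.exp(-((x - p) ** 2) / (2 * WIDTH ** 2)) for p in PREFERRED]


def decode(pattern):
    """Read the value back as an activity-weighted average of preferences."""
    total = sum(pattern)
    return sum(a * p for a, p in zip(pattern, PREFERRED)) / total


def distance(a, b):
    """Euclidean distance between two activity patterns."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


pattern = encode(0.4)
print([round(a, 2) for a in pattern])    # several units partially active
print(round(decode(pattern), 2))         # approximate readout of the value

near = distance(encode(0.30), encode(0.35))
far = distance(encode(0.30), encode(0.80))
print(near < far)                        # similar values, similar patterns
```

The readout is only approximate (it is biased near the edges of the range), but no single unit has to be dedicated to any one value.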

🧪 -> Example

- List examples of where entry contents can fit in a larger context

🔗 -> Related Word

- Link all related words