📗 -> 10/07/25: ECS171-L4

Lecture 3 slides

Lecture 4 slides

🎤 Vocab

❗ Unit and Larger Context

Small summary

✒️ -> Scratch Notes

Continuing from last lecture, starting with a review of conditional probabilities and maximum likelihood estimation (MLE)

Logistic Regression

Motivation:

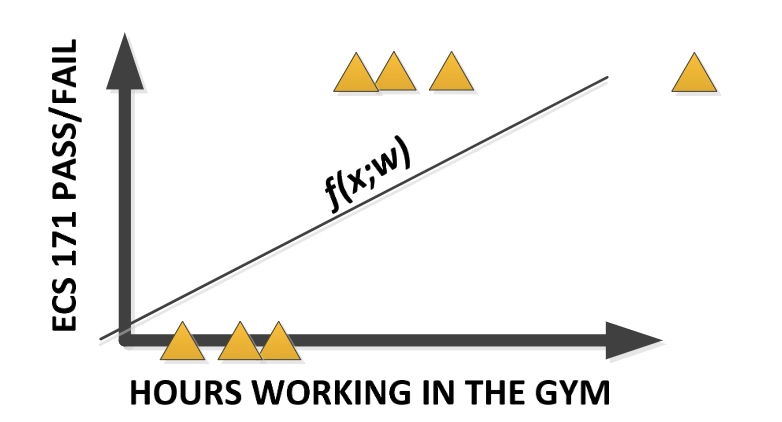

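Fitting a linear regression to a classification problem is sensitive to outliers: a single extreme point drags the fitted line toward it (here the line gets pulled downward), which moves the point where the line crosses the decision threshold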
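To make the outlier sensitivity concrete, here is a minimal sketch. The data is hypothetical (not from the slides), and `linear_threshold` is a helper name made up for this example. It fits a least-squares line to 0/1 labels and reports where the line crosses 0.5, with and without one extreme point.

```python
import numpy as np

def linear_threshold(x, y):
    """Fit y ~ a*x + b by least squares; return the x where the line crosses 0.5."""
    a, b = np.polyfit(x, y, deg=1)  # coefficients come back highest degree first
    return (0.5 - b) / a

# Hypothetical 1-D data: class 0 for x = 1..4, class 1 for x = 5..8.
x_clean = np.arange(1, 9, dtype=float)
y_clean = np.array([0, 0, 0, 0, 1, 1, 1, 1], dtype=float)

# Same data plus one extreme, correctly labeled class-1 point at x = 30.
x_outlier = np.append(x_clean, 30.0)
y_outlier = np.append(y_clean, 1.0)

t_clean = linear_threshold(x_clean, y_clean)
t_outlier = linear_threshold(x_outlier, y_outlier)

print(f"threshold without outlier: {t_clean:.2f}")
print(f"threshold with outlier:    {t_outlier:.2f}")
```

On this toy data the threshold sits at 4.5 without the outlier and moves past 5 with it, so the point at x = 5 flips to class 0 even though the added point was labeled correctly.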

Instead, use logistic regression, which fits a sigmoid (logistic) function rather than a polynomial

- Multiple functions have the shape we're looking for (squashed ends that saturate, with a roughly linear section in the middle):

  - Hyperbolic tangent (tanh), sigmoid

- Two main traits we're interested in:

  - Output between 0 and 1, so it can represent a probability

  - Nonlinear, easing in and out
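A quick numerical check of the two traits, as a sketch: the sigmoid stays strictly between 0 and 1, while tanh has the same S shape but ranges over (-1, 1), so it needs rescaling before it can be read as a probability. The input grid `z` is an arbitrary choice for illustration.

```python
import numpy as np

def sigmoid(z):
    """Logistic function: maps any real z into the open interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

z = np.linspace(-6.0, 6.0, 13)  # -6, -5, ..., 6 (includes z = 0)
s = sigmoid(z)
t = np.tanh(z)

# Shifting and scaling tanh recovers the sigmoid:
# sigmoid(z) = (tanh(z / 2) + 1) / 2
t_rescaled = (np.tanh(z / 2.0) + 1.0) / 2.0

for zi, si, ti in zip(z, s, t):
    print(f"z={zi:+.1f}  sigmoid={si:.4f}  tanh={ti:+.4f}")
```

Both curves flatten out at the ends and are close to linear near z = 0, which is the "eases in and out" behavior.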

Finding the optimal parameter/weight set?

There is no closed-form solution, but we can formulate the problem as maximum likelihood estimation (MLE) and solve it with gradient descent
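A minimal sketch of that idea: batch gradient descent on the mean negative log-likelihood (minimizing it is equivalent to maximizing the likelihood). The toy data, the learning rate, the step count, and the names `nll` and `fit_logistic` are all assumptions made for illustration, not the lecture's implementation.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def nll(w, X, y):
    """Mean negative log-likelihood of labels y under the logistic model."""
    p = sigmoid(X @ w)
    eps = 1e-12  # guards against log(0)
    return -np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps))

def fit_logistic(X, y, lr=0.1, steps=2000):
    """Plain batch gradient descent on the mean NLL, starting from w = 0."""
    w = np.zeros(X.shape[1])
    for _ in range(steps):
        grad = X.T @ (sigmoid(X @ w) - y) / len(y)  # gradient of the mean NLL
        w -= lr * grad
    return w

# Hypothetical toy data; the feature is centered to keep the problem well conditioned.
x = np.arange(1, 9, dtype=float)
y = np.array([0, 0, 0, 0, 1, 1, 1, 1], dtype=float)
X = np.column_stack([np.ones_like(x), x - x.mean()])  # bias column + feature

w0 = np.zeros(2)
w = fit_logistic(X, y)
pred = (sigmoid(X @ w) >= 0.5).astype(float)

print("NLL at start: ", nll(w0, X, y))
print("NLL after fit:", nll(w, X, y))
print("predictions:  ", pred)
```

This toy set is perfectly separable, so the likelihood has no finite maximizer and the weights keep growing the longer the loop runs. The fixed step count is what stops it here; real data with overlapping classes does not have this issue.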

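Probability of each class (writing $\sigma(z) = 1/(1+e^{-z})$ for the sigmoid and $w$ for the weights; notation assumed):

$$P(y=1 \mid x; w) = \sigma(w^\top x), \qquad P(y=0 \mid x; w) = 1 - \sigma(w^\top x)$$

or cleanly:

$$P(y \mid x; w) = \sigma(w^\top x)^{y}\,\bigl(1 - \sigma(w^\top x)\bigr)^{1-y}, \qquad y \in \{0, 1\}$$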
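To connect the class probabilities to the MLE objective, here is one way to write out the derivation. It is a sketch under assumed notation, not taken from the slides: $n$ independent examples $(x_i, y_i)$ with $y_i \in \{0, 1\}$, weights $w$, sigmoid $\sigma(z) = 1/(1+e^{-z})$, and step size $\alpha$.

$$
\begin{aligned}
L(w) &= \prod_{i=1}^{n} \sigma(w^\top x_i)^{y_i}\,\bigl(1-\sigma(w^\top x_i)\bigr)^{1-y_i} \\
\ell(w) &= \log L(w) = \sum_{i=1}^{n} \Bigl[\, y_i \log \sigma(w^\top x_i) + (1-y_i)\log\bigl(1-\sigma(w^\top x_i)\bigr) \Bigr] \\
\nabla_w \ell(w) &= \sum_{i=1}^{n} \bigl(y_i - \sigma(w^\top x_i)\bigr)\, x_i
\end{aligned}
$$

The gradient step follows from $\sigma'(z) = \sigma(z)\bigl(1-\sigma(z)\bigr)$. Setting $\nabla_w \ell(w) = 0$ cannot be solved for $w$ in closed form, so we iterate $w \leftarrow w + \alpha\, \nabla_w \ell(w)$ (gradient ascent on $\ell$, equivalently gradient descent on $-\ell$).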

🧪 -> Refresh the Info

Did you generally find the overall content understandable or compelling or relevant or not, and why, or which aspects of the reading were most novel or challenging for you and which aspects were most familiar or straightforward?

Did a specific aspect of the reading raise questions for you or relate to other ideas and findings you’ve encountered, or are there other related issues you wish had been covered?

🔗 -> Links

Resources

- Put useful links here

Connections

- Link all related words