📗 -> Explainable AI

❗ Information

Explainable AI, or XAI for short.

- Prof. H. Pirsiavash

This is a very important field of study: learning how AI models make decisions, and understanding those decisions, is not just a scientific pursuit but a practical one. A diagnostic AI is far more useful if we can understand how and why it arrives at its decisions.

I’m interested in this mainly because of my interest in the brain and in developing intelligent systems. Understanding AI decision-making at a deeper level is the first step to improving it. To pursue this, I’m also coming at it from the other angle: studying the intelligent system that is the brain. Both are massive sinkholes of information, but Explainable AI is a satisfying place to start.

This is a massive topic of study, with a number of resources on the matter:

- https://christophm.github.io/interpretable-ml-book/preface-by-the-author.html

- https://www.reddit.com/r/MachineLearning/comments/uhm3vw/d_anyone_working_on_explanable_ai/

Cool application here in orthopedics!

📄 -> Methodology

- Simple or full description

✒️ -> Usage

In Computer Vision

Very useful here, particularly because vision models lend themselves to visualization and explanation.

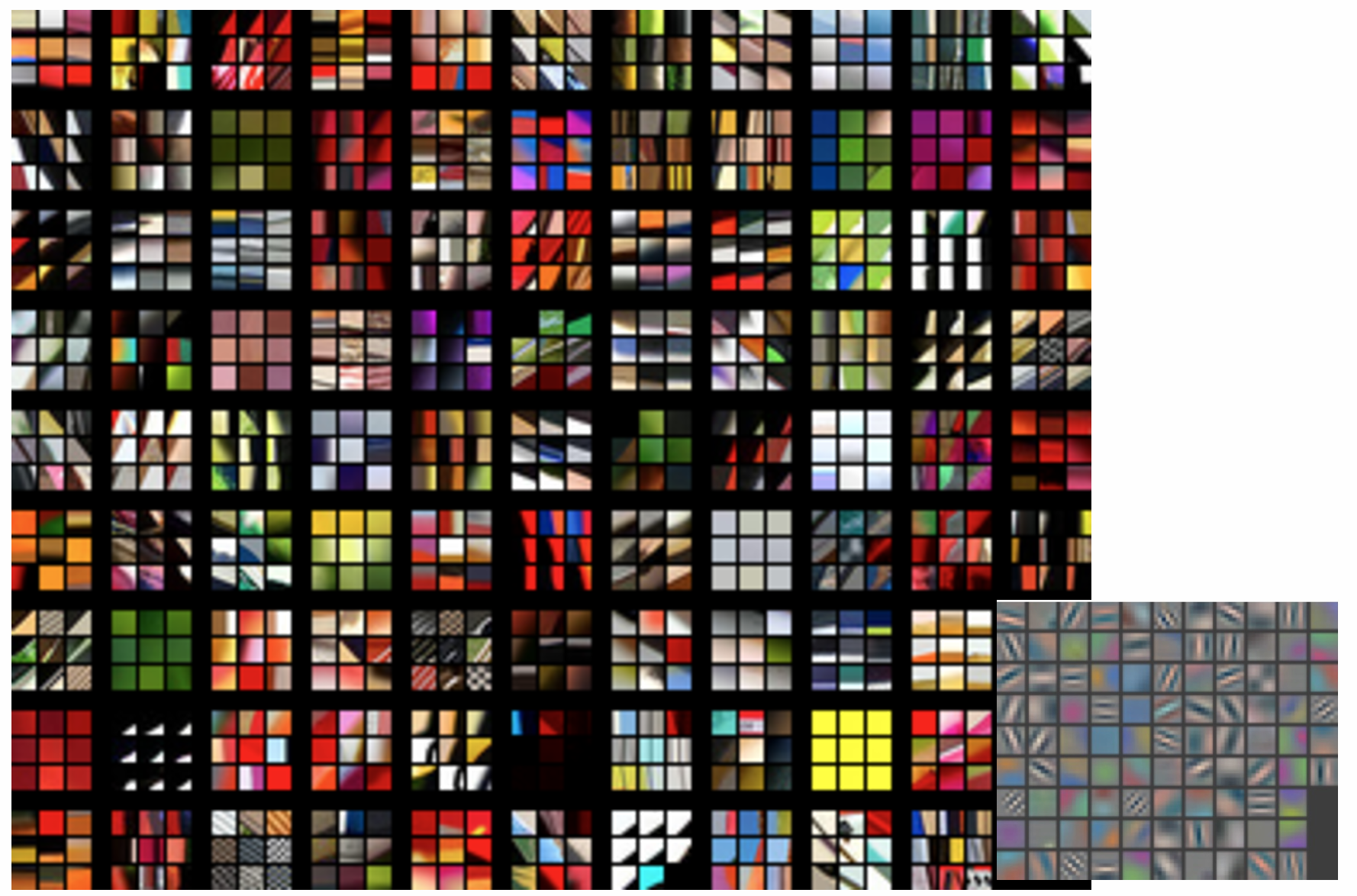

Visualizing CNNs

Cool article

Analogies to the visual system: layers learn increasingly complex features as you go deeper.

Big ties to Explainable AI: there is great value in being able to see what the network is doing under the hood.

Strategies for visualizing:

- Visualizing weights: this is very useful for lower-level filters that extract physical features. A good use case for this is edge detection.

- Visualizing responsiveness: at higher levels, the weights become harder to interpret because they respond to latent features rather than direct image qualities. To address this, show the images each neuron responds to most strongly, typically the top 9.
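A minimal sketch of the "top 9" idea, assuming each neuron's response to every image has already been reduced to a single number (here, as an assumption, the maximum of that channel's feature map). `max_response` and `top_k_images` are hypothetical helpers, not a library API; in practice the activations would come from a forward pass in a deep learning framework.

```python
import heapq
from typing import Dict, List, Sequence


def max_response(feature_map: Sequence[Sequence[float]]) -> float:
    """Collapse one channel's 2D feature map to a single score (assumed: its max)."""
    return max(max(row) for row in feature_map)


def top_k_images(activations: Sequence[Sequence[float]], k: int = 9) -> Dict[int, List[int]]:
    """For each neuron j, return indices of the k images with the highest response.

    activations[i][j] is the precomputed response of neuron j to image i.
    """
    if not activations:
        return {}
    num_images = len(activations)
    num_neurons = len(activations[0])
    top: Dict[int, List[int]] = {}
    for j in range(num_neurons):
        # nlargest returns image indices ordered from strongest to weakest response
        top[j] = heapq.nlargest(k, range(num_images), key=lambda i: activations[i][j])
    return top


# Usage: 3 images, 2 neurons (toy numbers, not real activations)
acts = [
    [0.1, 0.9],
    [0.8, 0.2],
    [0.5, 0.4],
]
print(top_k_images(acts, k=2))  # {0: [1, 2], 1: [0, 2]}
```

Scoring by the strongest spatial position means a neuron that fires on a small object still ranks that image highly; swapping in a mean is a one-line change in `max_response`.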

Layer 1


- Simple features, similar to V1 in the brain

Filters are in the bottom right; the top-9 images are shown large.
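A minimal sketch of weight visualization for a single layer-1 filter, assuming the common approach of min-max rescaling each filter independently to [0, 1] so it can be shown as a grayscale patch. `normalize_filter` and `to_ascii` are hypothetical helpers, and the 3x3 Sobel-style kernel is a hand-written vertical edge detector standing in for a learned filter, not weights from a trained network.

```python
from typing import List, Sequence


def normalize_filter(weights: Sequence[Sequence[float]]) -> List[List[float]]:
    """Min-max rescale one 2D filter to [0, 1] for display as a grayscale image."""
    flat = [w for row in weights for w in row]
    lo, hi = min(flat), max(flat)
    span = hi - lo
    if span == 0:
        # A constant filter carries no spatial pattern; show it as mid-gray
        return [[0.5 for _ in row] for row in weights]
    return [[(w - lo) / span for w in row] for row in weights]


def to_ascii(patch: Sequence[Sequence[float]], ramp: str = " .:-=+*#%@") -> str:
    """Render a [0, 1] patch as text: dark -> ' ', bright -> '@'."""
    steps = len(ramp) - 1
    return "\n".join("".join(ramp[round(v * steps)] for v in row) for row in patch)


# Hand-written vertical edge detector (Sobel-style), standing in for a learned filter
sobel_x = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]
patch = normalize_filter(sobel_x)
print(patch)  # [[0.25, 0.5, 0.75], [0.0, 0.5, 1.0], [0.25, 0.5, 0.75]]
print(to_ascii(patch))
```

Rescaling per filter (rather than across the whole layer) makes each filter's pattern visible, at the cost of hiding differences in magnitude between filters.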

🧪 -> Example

- Define examples where it can be used

🔗 -> Related Word

- Link all related words